ZooKeeper workflow

May 26, 2021 Zookeeper


Once the ZooKeeper ensemble (the group of ZooKeeper servers) starts, it waits for clients to connect. A client connects to one of the nodes in the ensemble, which may be the leader or a follower. Once the client is connected, the node assigns it a session ID and sends it an acknowledgement. If the client does not receive an acknowledgement, it tries to connect to another node in the ensemble. Once connected, the client sends heartbeats to the node at regular intervals to make sure that the connection is not lost.
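The connection steps above can be sketched in a few lines of Python. This is only an in-memory illustration, not the real ZooKeeper client API: the `Node` class, the `reachable` flag, the counter used for session IDs, and the `connect_to_ensemble` helper are all assumptions made for this example.

```python
import itertools


class Node:
    """Hypothetical stand-in for one ZooKeeper server (not a real client API)."""

    # Assumption: session IDs are modelled as a simple shared counter.
    _session_ids = itertools.count(1)

    def __init__(self, name, reachable=True):
        self.name = name
        self.reachable = reachable

    def connect(self):
        # A reachable node assigns a session ID and acknowledges the client.
        # An unreachable node sends no acknowledgement (modelled as None).
        if not self.reachable:
            return None
        return next(Node._session_ids)

    def heartbeat(self, session_id):
        # The node answers heartbeats only while it is reachable.
        return self.reachable


def connect_to_ensemble(nodes):
    """Try each node in turn until one acknowledges with a session ID."""
    for node in nodes:
        session_id = node.connect()
        if session_id is not None:
            return node, session_id
    raise ConnectionError("no node in the ensemble acknowledged the connection")


# The first node does not answer, so the client falls back to the second one.
ensemble = [Node("node1", reachable=False), Node("node2"), Node("node3")]
node, session_id = connect_to_ensemble(ensemble)
print(node.name, session_id)
print(node.heartbeat(session_id))
```

In a real deployment the client library handles the retry and the heartbeats itself; the sketch only makes the order of the steps explicit.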

- If the client wants to read a particular znode, it sends a read request with the znode path to the node it is connected to, and the node returns the requested znode from its own database. For this reason, reads are fast in a ZooKeeper ensemble.

- If the client wants to store data in the ZooKeeper ensemble, it sends the znode path and the data to the server it is connected to. That server forwards the request to the leader, which then re-issues the write request to all the followers. The write succeeds, and a success return code is sent to the client, only if a majority of the nodes respond successfully. Otherwise, the write request fails. A strict majority of the nodes is called a quorum.
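The read and write paths described above can be illustrated with a small in-memory model. It is a sketch under simplifying assumptions, not ZooKeeper's actual implementation: the `Server` and `Leader` classes, the `alive` flag, and the `build_ensemble` helper are hypothetical names, and a dictionary stands in for each server's database.

```python
class Server:
    """Hypothetical in-memory model of one ensemble member."""

    def __init__(self, name):
        self.name = name
        self.alive = True
        self.db = {}        # this server's local copy of the znode data
        self.leader = None  # set when the ensemble is assembled

    def read(self, path):
        # Reads are answered from the local database only.
        return self.db.get(path)

    def write(self, path, data):
        # Any server forwards a client's write request to the leader.
        return self.leader.propose(path, data)


class Leader(Server):
    def __init__(self, name):
        super().__init__(name)
        self.followers = []
        self.leader = self

    def propose(self, path, data):
        members = [self] + self.followers
        # Step 1: collect acknowledgements (failed servers do not reply).
        acks = [m for m in members if m.alive]
        quorum = len(members) // 2 + 1  # strict majority
        if len(acks) < quorum:
            return False  # no quorum, the write request fails
        # Step 2: store the data on every server that acknowledged.
        for m in acks:
            m.db[path] = data
        return True


def build_ensemble(size):
    """Create one leader and size - 1 followers wired to it."""
    leader = Leader("leader")
    for i in range(1, size):
        follower = Server(f"follower{i}")
        follower.leader = leader
        leader.followers.append(follower)
    return leader


leader = build_ensemble(3)
f1, f2 = leader.followers
print(f1.write("/config", b"v1"))  # True: all three servers acknowledge
print(f2.read("/config"))          # b'v1', served from f2's own database
f1.alive = False
f2.alive = False
print(leader.write("/config", b"v2"))  # False: 1 of 3 is not a majority
```

The model ignores leader election and recovery of failed servers; it only shows why a read touches a single server while a write needs a quorum.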

The nodes in the ZooKeeper cluster

The number of nodes in a ZooKeeper ensemble can vary.

So, let's analyze how the number of nodes affects the ZooKeeper workflow:

- If we have a single node, the ZooKeeper ensemble fails when that node fails. That node is a "single point of failure", which is why this setup is not recommended in a production environment.

- If we have two nodes and one node fails, we no longer have a majority, because one out of two is not a strict majority.

- If we have three nodes and one node fails, the remaining two still form a majority, so three nodes is the minimum requirement. A ZooKeeper ensemble must have at least three nodes in a live production environment.

- If we have four nodes and two nodes fail, the ensemble fails again, so four nodes tolerate only one failure, the same as three nodes. The extra node serves no purpose, so it is better to add nodes in odd numbers, such as 3, 5, 7.
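The cases above all follow from one piece of arithmetic: a quorum is a strict majority, so an ensemble of `n` nodes needs `n // 2 + 1` nodes alive. The short sketch below (the function names are chosen for this example) prints the quorum size and the number of failures each ensemble size can survive.

```python
def quorum_size(n):
    """Smallest number of nodes that forms a strict majority of n."""
    return n // 2 + 1


def tolerated_failures(n):
    """How many nodes may fail while a quorum still remains."""
    return n - quorum_size(n)


print("nodes quorum tolerated_failures")
for n in range(1, 8):
    print(n, quorum_size(n), tolerated_failures(n))
```

Going from 3 to 4 nodes, or from 5 to 6, raises the quorum size without raising the number of tolerated failures, which is why odd ensemble sizes are preferred.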

We know that a write is more expensive than a read in a ZooKeeper ensemble, because all the nodes need to write the same data to their databases. Therefore, for a balanced environment, it is better to have a small number of nodes (for example, 3, 5 or 7) than a large number of nodes.

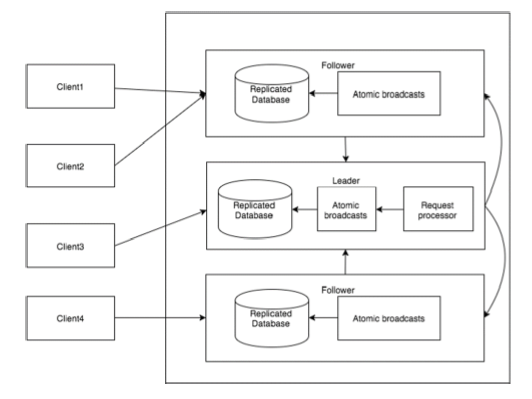

The illustration above describes the ZooKeeper workflow, and the following table describes its components.

| Component | Description |
|---|---|
| Write | The write process is handled by the leader node. The leader forwards the write request to all the nodes and waits for their replies. Once a majority (more than half) of the nodes reply, the write process is complete. |
| Read | Reads are performed internally by the node the client is connected to, so there is no need to interact with the rest of the cluster. |
| Replicated database | Used to store data in ZooKeeper. Each node has its own database, and ZooKeeper's consistency guarantees keep the same data on every node. |
| Leader | The leader is the node that handles write requests. |
| Follower | A follower receives write requests from clients and forwards them to the leader node. |
| Request processor | Exists only in the leader node. It manages the write requests forwarded by the follower nodes. |
| Atomic broadcast | Responsible for broadcasting changes from the leader node to the follower nodes. |