SQL Server AlwaysOn from Beginner to Advanced: Storage

May 30, 2021


This section covers SQL Server storage and is shorter than the other sections. It offers some recommendations for using storage in clustered and non-clustered systems. The focus, of course, is on selecting and configuring storage for a standard AlwaysOn availability group configuration.

An AlwaysOn deployment is built on top of a Windows Server Failover Cluster (WSFC), and each server typically runs its own standalone instance of SQL Server. Each server also uses its own local storage to hold the database files (data files, log files, backup files, and so on) for its SQL Server instance. Although all replica nodes belong to the same cluster, there is no disk-based witness or failover cluster instance (FCI), and there is no requirement for shared storage. This avoids the single point of failure that shared storage represents in an FCI. However, an AlwaysOn availability group can also use an FCI as an availability replica. This not only reintroduces the single-point-of-failure risk but also adds complexity to the cluster nodes.
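To make the shared-storage point concrete, here is a minimal sketch that models each node as a set of visible storage volumes and reports any volume seen by more than one node. The `Node` class, the `shared_volumes` function, and the node and volume names are hypothetical illustrations, not part of any SQL Server or WSFC API.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A cluster node and the storage volumes it can see (hypothetical model)."""
    name: str
    volumes: set[str] = field(default_factory=set)


def shared_volumes(nodes: list[Node]) -> dict[str, list[str]]:
    """Return each volume visible to more than one node, with the nodes that see it.

    An empty result means no node depends on shared storage, which is the
    normal case for availability replicas on local storage.
    """
    owners: dict[str, list[str]] = {}
    for node in nodes:
        for volume in sorted(node.volumes):
            owners.setdefault(volume, []).append(node.name)
    return {vol: names for vol, names in owners.items() if len(names) > 1}


# Two standalone replicas, each with local disks only.
ag_only = [Node("SQL1", {"SQL1-local"}), Node("SQL2", {"SQL2-local"})]
print(shared_volumes(ag_only))  # {}

# One replica is an FCI whose two nodes share a SAN LUN.
with_fci = [
    Node("SQL1", {"SQL1-local"}),
    Node("FCI-A", {"SAN-LUN1"}),
    Node("FCI-B", {"SAN-LUN1"}),
]
print(shared_volumes(with_fci))  # {'SAN-LUN1': ['FCI-A', 'FCI-B']}
```

The second case shows how adding an FCI as a replica brings back a volume that several nodes depend on, which is exactly the single point of failure described above.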

Now let's look at the two core types of storage system:

Locally attached storage

Networked storage

Here's a closer look:

Local Attached Storage:

In this mode, storage is attached directly to the server: the hard drives plug into the hardware backplane, which is connected to the server's motherboard. Older configurations may include an add-in RAID controller on the PCI bus, cabled to the drives with 68- or 80-pin connectors.

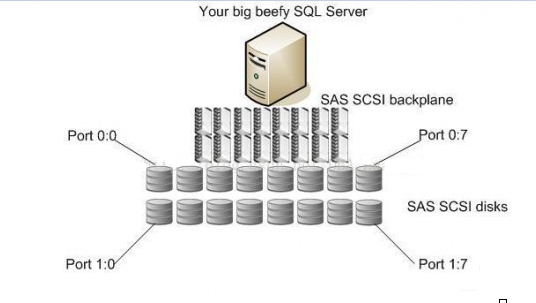

The diagram above shows a typical local storage layout. It has relatively few points of failure and low complexity, and it provides fast drive access. The backplane has an I/O BIOS that can manage drive redundancy across RAID arrays built from the local drives, but because of server hardware limits, a maximum of 16 drives is typically available.
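Because the backplane caps the drive count, the RAID level chosen for those drives determines how much usable space is left. The sketch below applies the standard capacity formulas for common RAID levels under the 16-drive limit from the text; the function name and the 1200 GB drive size are hypothetical, and controller metadata and formatting overhead are ignored.

```python
MAX_LOCAL_DRIVES = 16  # typical backplane limit mentioned in the text


def usable_capacity_gb(level: str, drives: int, drive_gb: int) -> int:
    """Usable capacity of one RAID array built from identical local drives.

    Ignores controller metadata and formatting overhead.
    """
    if drives > MAX_LOCAL_DRIVES:
        raise ValueError(f"{drives} drives exceeds the {MAX_LOCAL_DRIVES}-bay backplane")
    if level == "RAID1":
        if drives != 2:
            raise ValueError("RAID1 here means a two-drive mirror")
        return drive_gb                   # one drive's worth of data, mirrored
    if level == "RAID10":
        if drives < 4 or drives % 2:
            raise ValueError("RAID10 needs an even number of drives, at least 4")
        return drives // 2 * drive_gb     # half the drives hold mirror copies
    if level == "RAID5":
        if drives < 3:
            raise ValueError("RAID5 needs at least 3 drives")
        return (drives - 1) * drive_gb    # one drive's worth of parity
    if level == "RAID6":
        if drives < 4:
            raise ValueError("RAID6 needs at least 4 drives")
        return (drives - 2) * drive_gb    # two drives' worth of parity
    raise ValueError(f"unsupported RAID level: {level}")


# Hypothetical: a full 16-bay backplane of 1200 GB drives.
for level in ("RAID10", "RAID5", "RAID6"):
    print(level, usable_capacity_gb(level, 16, 1200))
```

RAID10 gives up the most capacity but is often preferred for write-heavy files such as transaction logs; parity levels keep more space at the cost of write overhead.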

This is a schematic of a typical standalone node without networked storage: in WSFC, no storage is shared with other nodes. This also removes the need to replicate storage across the physical locations of the nodes.

Network Storage:

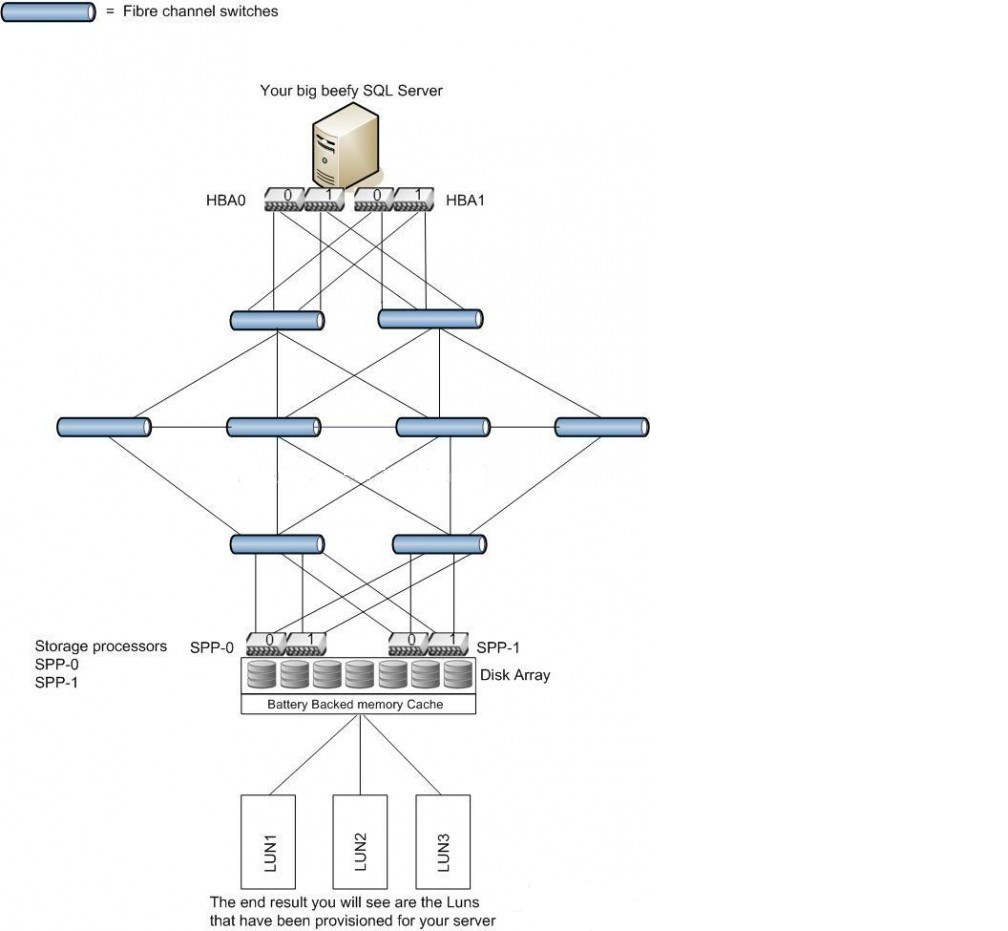

Networked storage can be provided as a shared resource to multiple computer systems. A central repository makes the drives easier to manage by reducing the number of separate arrays attached to each server. As the illustration shows, such a system often has many servers connected over a Fibre Channel (FC) network, usually called the fabric. Each computer connects through a host bus adapter (HBA), a card that plugs into a computer or mainframe and links it to the network switch; in effect, an HBA is similar to a network card.

Servers can also be interconnected over an iSCSI network, which is relatively new but bandwidth-constrained (1 Gbps). iSCSI runs on a standard, isolated TCP/IP network. Servers typically use dedicated network cards that carry only iSCSI traffic, which reduces load and keeps iSCSI traffic separate from other traffic. Modern iSCSI can already handle bandwidth of up to 10 Gbps. One benefit of an iSCSI configuration is that it is often more economical than a traditional FC network, but that is not always the case.
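The difference between 1 Gbps and 10 Gbps iSCSI is easier to judge in bytes and minutes. This sketch converts link speed to approximate payload throughput and estimates how long a transfer would take; the 0.9 efficiency factor for Ethernet, TCP, and iSCSI overhead is an assumption, as are the function names and the 500 GB example size.

```python
def usable_mb_per_s(link_gbps: float, efficiency: float = 0.9) -> float:
    """Approximate payload throughput (MB/s, decimal) of one network link.

    `efficiency` is an assumed fraction left after Ethernet/TCP/iSCSI overhead.
    """
    raw_bytes_per_s = link_gbps * 1e9 / 8   # bits -> bytes
    return raw_bytes_per_s * efficiency / 1e6


def transfer_minutes(size_gb: float, link_gbps: float, efficiency: float = 0.9) -> float:
    """Minutes to move `size_gb` gigabytes (decimal) over one link."""
    seconds = size_gb * 1000 / usable_mb_per_s(link_gbps, efficiency)
    return seconds / 60


for gbps in (1, 10):
    print(f"{gbps:>2} Gbps: ~{usable_mb_per_s(gbps):.0f} MB/s, "
          f"500 GB in ~{transfer_minutes(500, gbps):.0f} min")
```

At 1 Gbps the raw ceiling is 125 MB/s, which a single busy SQL Server instance can saturate; the tenfold jump to 10 Gbps is what makes modern iSCSI a realistic alternative to FC.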

When many servers send requests to the storage system and receive results from it, you quickly discover how much traffic the FC network has to carry. As with TCP/IP networks, FC networks can become congested, and the storage area network then causes performance problems. A complex SAN configuration involves multiple switches, a large number of cables, and additional power requirements. As the illustration above shows, the path and complexity of data access are significantly greater in such cases.
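One rough way to see fabric congestion coming is to compare the hosts' combined peak demand with the bandwidth of the storage ports that serve them. The sketch below computes that oversubscription ratio; the function name and all numbers are hypothetical, with about 800 MB/s per port used as a rough figure for an 8 Gb FC port.

```python
def oversubscription_ratio(host_demand_mb_s: list[float], array_ports: int, port_mb_s: float) -> float:
    """Aggregate host demand divided by the storage-side bandwidth that serves it.

    A ratio above 1.0 means the hosts can ask for more than the array ports can
    deliver at once, so requests queue in the fabric.
    """
    if array_ports <= 0 or port_mb_s <= 0:
        raise ValueError("array bandwidth must be positive")
    return sum(host_demand_mb_s) / (array_ports * port_mb_s)


# Hypothetical: 12 servers each peaking at 400 MB/s, array with 4 ports of ~800 MB/s.
ratio = oversubscription_ratio([400] * 12, array_ports=4, port_mb_s=800)
print(f"oversubscription {ratio:.2f}:1, congested at peak: {ratio > 1}")
```

Some oversubscription is normal because servers rarely peak together; the ratio simply shows how much headroom disappears when they do.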

Issuing I/O requests over such long paths and complex routes costs time and adds other overhead. So what do such systems offer in return for consolidating storage? This type of storage makes it easier to provision resources and deliver them to a wide range of consumers. As with virtualization, however, it is not suitable for every workload.

This type of storage is also often used for SQL Server FCIs, where many LUNs are carved out of the disk array. The drawback is that the array may be formatted with a 128 KB block size, which is not optimal for SQL Server. The advantage is that, when properly configured, I/O requests can be completed at the array itself: writes land in a high-speed memory cache, and the cached data is flushed to disk at an appropriate time, which reduces the performance impact. In the event of a power outage, a backup power supply keeps the cache alive long enough to flush it to disk and avoid data loss.
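Whatever block size the array uses, a common way to check whether a volume is laid out sensibly for SQL Server is a set of integer-ratio tests drawn from Microsoft's disk partition alignment guidance: the partition offset should divide evenly by the stripe unit, and the stripe unit should divide evenly by the NTFS allocation unit (cluster size), commonly set to 64 KB to match a SQL Server extent. The sketch below encodes those checks; the function and key names are hypothetical, and all sizes are in bytes.

```python
KB = 1024
SQL_PAGE = 8 * KB          # SQL Server page size
SQL_EXTENT = 8 * SQL_PAGE  # an extent is eight contiguous pages (64 KB)


def alignment_report(partition_offset: int, stripe_unit: int, allocation_unit: int) -> dict[str, bool]:
    """Integer-ratio checks from common SQL Server partition-alignment guidance."""
    return {
        # The partition should start on a stripe-unit boundary ...
        "offset_aligned_to_stripe": partition_offset % stripe_unit == 0,
        # ... and each stripe unit should hold a whole number of clusters.
        "stripe_multiple_of_cluster": stripe_unit % allocation_unit == 0,
        # 64 KB NTFS clusters match SQL Server's extent size.
        "cluster_is_one_extent": allocation_unit == SQL_EXTENT,
    }


# 1 MB offset (the Windows Server 2008 and later default), 128 KB stripe, 64 KB clusters.
print(alignment_report(1024 * KB, 128 * KB, 64 * KB))
# Legacy 63-sector offset (63 * 512 bytes) used by older Windows versions.
print(alignment_report(63 * 512, 128 * KB, 64 * KB))
```

A misaligned offset, as in the second call, can make a single SQL Server I/O straddle two stripe units, so the array does two physical operations where one would do.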

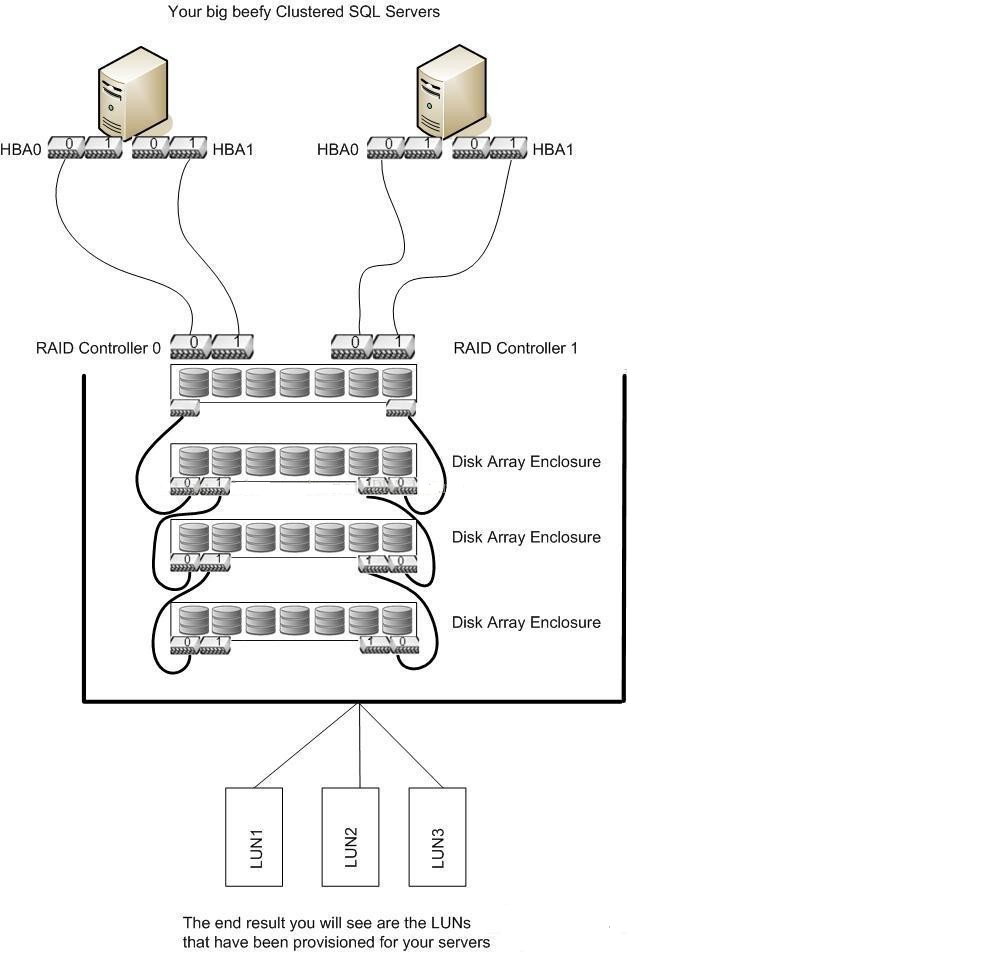

There is also a type of networked storage that lets highly available nodes share storage while avoiding the complexity of many host connections. This type, called Direct Attached Storage (DAS), uses private fiber-based connections, so it behaves essentially like local storage. The illustration shows a typical private, highly available storage configuration.

In this scenario, if you want to build a private, highly available cluster, this approach is slightly better than local storage. Some storage vendors offer enclosures that connect over fiber and allow up to two hosts to connect over multiple paths in high-availability scenarios. Adding array enclosures increases the amount of storage available.
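Multiple paths matter because a host keeps reaching its storage when one cable, HBA, or controller fails. The sketch below models simple active/passive multipathing, where I/O uses the first healthy path in priority order and fails over to the next one. The `Path` and `FailoverMultipath` names and the path labels are hypothetical; real multipath software (such as Windows MPIO) also supports other policies, like round robin.

```python
from dataclasses import dataclass


@dataclass
class Path:
    """One route from a host HBA to a storage controller (hypothetical model)."""
    name: str
    healthy: bool = True


class FailoverMultipath:
    """Active/passive path selection: use the first healthy path in priority order."""

    def __init__(self, paths: list[Path]) -> None:
        if not paths:
            raise ValueError("at least one path is required")
        self.paths = list(paths)

    def active_path(self) -> Path:
        for path in self.paths:
            if path.healthy:
                return path
        raise RuntimeError("all paths to the storage enclosure are down")

    def mark_failed(self, name: str) -> None:
        for path in self.paths:
            if path.name == name:
                path.healthy = False
                return
        raise KeyError(name)


mp = FailoverMultipath([Path("HBA1->CtrlA"), Path("HBA2->CtrlB")])
print(mp.active_path().name)   # HBA1->CtrlA
mp.mark_failed("HBA1->CtrlA")  # e.g. a cable is pulled
print(mp.active_path().name)   # HBA2->CtrlB
```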

This type of storage can also be used for a SQL Server FCI. It suits a small or simple cluster in which a few nodes need to share storage. You may have noticed that the LUNs in the image above are drawn as a single box; that is because a Windows logical drive does not necessarily sit on its own separate physical array. The arrangement shown above is also the most common one when the disks are combined into a larger array.

Imagine a big cake; in this case, the cake is an array built from a pool of physical hard drives. Cutting a slice of the cake corresponds to carving a LUN out of the array and presenting it to Windows as a logical drive.
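The cake analogy can be sketched as a pool of capacity from which named LUNs are carved until the space runs out. The `StoragePool` class, its method names, and the disk and LUN sizes are hypothetical, and RAID overhead is deliberately ignored to keep the focus on carving.

```python
class StoragePool:
    """A disk array treated as one pool of capacity from which LUNs are carved (hypothetical model)."""

    def __init__(self, disks: int, disk_gb: int) -> None:
        # Raw capacity only; RAID overhead is deliberately ignored here.
        self.capacity_gb = disks * disk_gb
        self.luns: dict[str, int] = {}

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.luns.values())

    def carve_lun(self, name: str, size_gb: int) -> None:
        """Cut a slice off the cake: reserve `size_gb` for a new LUN."""
        if name in self.luns:
            raise ValueError(f"LUN {name!r} already exists")
        if not 0 < size_gb <= self.free_gb:
            raise ValueError(f"cannot carve {size_gb} GB; {self.free_gb} GB free")
        self.luns[name] = size_gb


pool = StoragePool(disks=8, disk_gb=1200)   # 9600 GB raw
pool.carve_lun("SQLData", 4000)             # presented to Windows as one logical drive
pool.carve_lun("SQLLog", 1000)              # another logical drive on the same spindles
print(pool.luns, "free:", pool.free_gb)
```

Both LUNs share the same physical drives underneath, which is why a busy log LUN and a busy data LUN carved from one pool can still compete for the same disks.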