H5 second-open schemes

May 31, 2021

Table of contents

1. Commonly used acceleration methods

2. Straight out (server-side rendering) plus offline package cache

3. VasSonic: a client-side proxy

Has your boss complained that pages open too slowly? Is page loading performance not up to standard? Below we look at the classic "second-open" schemes (pages that open within about a second) used by major companies and teams, to see whether one of them suits you.

This article lists and summarizes second-open schemes for hybrid apps, which tend to rely on cooperation with the native client. Some pure front-end schemes are also mentioned.

Commonly used acceleration methods

When it comes to h5 performance optimization, the usual advice is something of a cliché: conventional web optimization revolves around resource loading and html rendering. The former targets the first screen and the latter targets interactivity. For resource loading, the overall direction is smaller resource bundles, for example through compression, bundle splitting, dynamically loaded bundles, and image optimization. For html rendering, the overall direction is showing content sooner, for example through cdn distribution, dns resolution, http caching, data pre-requests, data caching, and the killer first-screen optimization, "straight out" (server-side rendering).

These techniques are a staple of front-end interviews, and for a front-end developer they are also the most important and basic ideas to reach for when a performance problem comes up. Which one to use depends on actual development needs, priorities, overall cost, and return on investment.

As client technologies such as react-native, weex, and flutter keep challenging traditional hybrid apps, hybrid is also evolving and speeding up, moving toward performance comparable to native apps. Here is a summary of some of the schemes that have emerged in hybrid development, in no particular order.

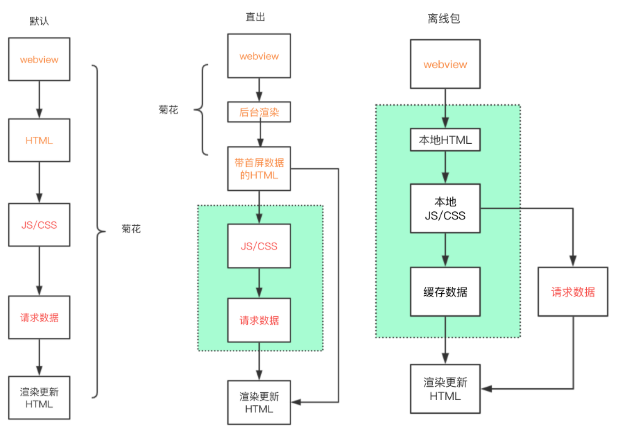

Straight out (server-side rendering) plus offline package cache

To optimize the first screen, most mainstream pages are rendered on the server, which sends finished html to the front end. This "straight out" approach (server-side rendering) avoids showing a loading spinner for a long time. Each mainstream framework has its own server-side rendering solution, such as vue-server-renderer and react-dom/server. Straight out eliminates the time spent on front-end rendering and ajax requests, but even though it can be further optimized with various caching strategies, loading the html itself still takes time.

Offline package technology addresses the time it takes to load the html file itself. The basic idea is to intercept urls uniformly in the webview and map resources to a local offline package. When an update is published, the client checks the resource version, downloads the new package, and maintains the resources in a local cache directory. Examples include Tencent's webso and Alloykit's offline package scheme.
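The url-to-local-file mapping can be sketched roughly as follows. This is only an illustration of the idea, not how webso or Alloykit actually implement it: the manifest shape and the names `OfflineManifest`, `resolveOffline`, and `needsUpdate` are all assumptions made for this example.

```typescript
// Hypothetical manifest of an unpacked offline package (shape assumed for this sketch).
interface OfflineManifest {
  version: string;                // version of the package currently on disk
  host: string;                   // host whose pages the package covers
  files: Record<string, string>;  // URL pathname -> file path inside the package
}

// Splits an http(s) URL into host and pathname, ignoring query string and hash.
function splitUrl(url: string): { host: string; path: string } | null {
  const match = /^https?:\/\/([^\/?#]+)([^?#]*)/.exec(url);
  if (match === null) return null;
  const [, host = "", path = ""] = match;
  return { host, path: path === "" ? "/" : path };
}

// Returns the local file to serve for an intercepted URL,
// or null so the caller lets the request go to the network.
function resolveOffline(url: string, manifest: OfflineManifest, packageRoot: string): string | null {
  const parts = splitUrl(url);
  if (parts === null || parts.host !== manifest.host) return null;
  const relative: string | undefined = manifest.files[parts.path];
  return relative === undefined ? null : `${packageRoot}/${relative}`;
}

// Version check: true when the server reports a version other than the local one.
function needsUpdate(manifest: OfflineManifest, latestVersion: string): boolean {
  return manifest.version !== latestVersion;
}
```

In a real client, the webview's request interception hook would call something like `resolveOffline` for every url and read the returned file from disk, falling back to the network whenever it returns null.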

The offline package strategy is already mature at many large companies. It is relatively transparent to the web side and not very intrusive.

VasSonic: a client-side proxy

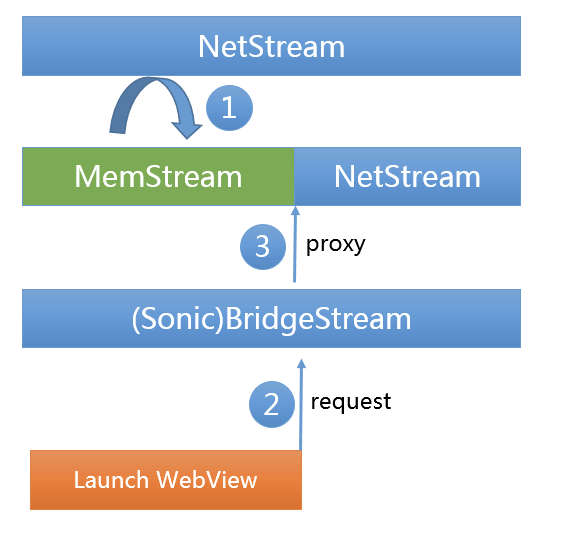

In hybrid h5, the time between the user's tap and seeing the page also includes webview initialization followed by the resource requests. These steps run serially, which leaves room to optimize for a more extreme experience. VasSonic is a lightweight hybrid framework developed by Tencent's value-added membership team. It supports the offline package strategy mentioned above and goes further with the following optimizations:

- Webview initialization and resource requests run in parallel, through a client-side proxy.

- Requests are intercepted as streams, so the page renders while it loads.

- Dynamic caching and incremental updates are implemented.

Put simply, here is how it works. There is not much to say about the parallel resource request: while the webview is still being created, the client proxy opens the network connection, requests the html, and caches it. When the webview thread later issues its own html request, the client intercepts it and returns the cached html to the webview.
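The parallel flow described above might look roughly like this. It is a minimal sketch of the idea rather than VasSonic's real API: `PrefetchSession`, `openPage`, and the injected `fetcher` and `initWebView` functions are hypothetical names used only for illustration.

```typescript
// Hypothetical network function injected by the caller.
type Fetcher = (url: string) => Promise<string>;

class PrefetchSession {
  private readonly url: string;
  private readonly pending: Promise<string>;

  constructor(url: string, fetcher: Fetcher) {
    this.url = url;
    // The request starts here, before any WebView exists.
    this.pending = fetcher(url);
  }

  // Called when the WebView asks for a URL. Returns the in-flight or
  // finished response when the URL matches, otherwise null.
  intercept(requestUrl: string): Promise<string> | null {
    return requestUrl === this.url ? this.pending : null;
  }
}

// Opens a page with the html request and WebView creation overlapping.
async function openPage(
  url: string,
  fetcher: Fetcher,
  initWebView: () => Promise<void>
): Promise<string> {
  const session = new PrefetchSession(url, fetcher); // network starts now
  await initWebView();                               // runs while the request is in flight
  const prefetched = session.intercept(url);
  return prefetched !== null ? prefetched : fetcher(url);
}
```

With serial loading the total time is roughly webview init plus the html request. Here it is closer to the larger of the two, because the request is already in flight (or finished) by the time the webview asks for it.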

How do dynamic caches and incremental updates work?

VasSonic divides html content into an html template and dynamic data. To distinguish the two, it defines its own set of html comment markup rules that label which parts are dynamic data and which are template. It then extends the http headers with a custom set of conventions for talking to the back end. When the webview initiates an http request, it carries the id of the previously cached page content. The back end uses this to determine whether the client needs to update its local data. If so, the new data is stitched into the cached html template to produce the new html, and finally the changed part of the data is computed and passed back to the page through a js callback for a layout refresh.
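To make the template/data split concrete, here is a small sketch. The comment syntax `<!--dyn:NAME-->...<!--/dyn:NAME-->` and the `{NAME}` placeholders are invented for this example and are not VasSonic's actual markers. The functions `splitPage` and `diffData` are likewise hypothetical.

```typescript
// Hypothetical marker syntax for this sketch: <!--dyn:NAME-->...<!--/dyn:NAME-->
const DYNAMIC_BLOCK = /<!--dyn:(\w+)-->([\s\S]*?)<!--\/dyn:\1-->/g;

interface SplitPage {
  template: string;              // html with each dynamic block replaced by {NAME}
  data: Record<string, string>;  // NAME -> content of the dynamic block
}

// Separates a page into its stable template and its dynamic data.
function splitPage(html: string): SplitPage {
  const data: Record<string, string> = {};
  const template = html.replace(DYNAMIC_BLOCK, (_match: string, name: string, body: string) => {
    data[name] = body;
    return `{${name}}`;
  });
  return { template, data };
}

// Returns only the dynamic blocks whose content differs from the old version.
function diffData(oldData: Record<string, string>, newData: Record<string, string>): Record<string, string> {
  const changed: Record<string, string> = {};
  for (const name of Object.keys(newData)) {
    if (oldData[name] !== newData[name]) changed[name] = newData[name];
  }
  return changed;
}
```

If two versions of a page produce the same `template`, only the result of `diffData` has to reach the page, which can then refresh just those parts instead of reloading everything.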

Image source: the Internet.

VasSonic's overall approach and results are very good, especially for typical web scenarios: templates usually change rarely and most changes are in the data, so a partial refresh achieves the second-open effect well. For the first load, running the request and the webview creation concurrently gives a good performance gain, and the offline package strategy is supported seamlessly.

However, VasSonic defines a special set of comment markers and extends the http headers, which requires changes to both the front end and the back end. This is very intrusive to the web side, and the cost of adoption and maintenance can be high.

PWA plus straight out and preloading

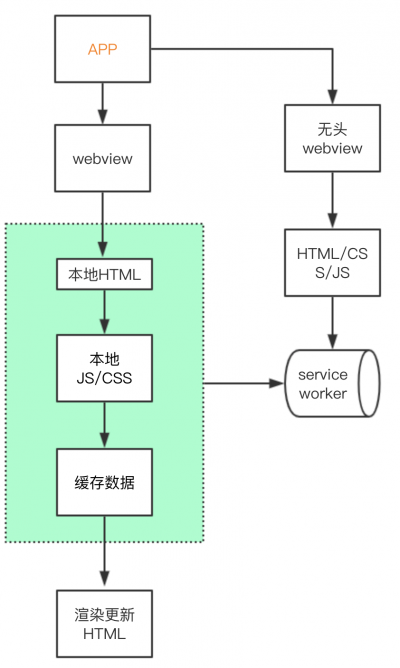

Both offline package technology and webview proxy requests intrude heavily on the front-end stack, since they depend on client-side (and sometimes back-end) changes. PWA, as a web standard, can accelerate and optimize load performance with a pure web solution.

First, PWA can cache common images, js, and css resources through cacheStorage. With a traditional http cache, by contrast, we typically do not cache html, because once a page is given too long a max-age, users keep seeing the old page until the browser cache expires.

With PWA, can html pages be cached directly? Because PWA allows fine-grained control over the cache, the answer is yes.

For straight-out (server-rendered) html, we can combine it with PWA: cache the html returned by the back end in cacheStorage, serve it from the local cache first on the next request, and at the same time issue a network request to update the local html.
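The "serve from cache first, update in the background" logic can be sketched as below. To keep the example runnable outside a service worker, the cache is modeled as a plain `Map` and the network call is injected. In a real service worker the same logic would sit inside a `fetch` event handler and use `caches.open` and `event.respondWith` instead. `openWithCache` and `OpenResult` are names invented for this sketch.

```typescript
// Hypothetical network function injected by the caller.
type HtmlFetcher = (url: string) => Promise<string>;

interface OpenResult {
  cached: string | undefined;  // html to show immediately, if a copy exists
  fresh: Promise<string>;      // resolves with the network copy once the cache is updated
}

// Cache first, with a background refresh on every visit.
function openWithCache(url: string, cache: Map<string, string>, fetchHtml: HtmlFetcher): OpenResult {
  const cached = cache.get(url);
  // Always refresh in the background so the next visit sees newer html.
  const fresh = fetchHtml(url).then((html) => {
    cache.set(url, html);
    return html;
  });
  return { cached, fresh };
}
```

A caller would render `cached` right away when it is defined and only wait for `fresh` on a cache miss. The trade-off is that a returning user may see html that is one visit old.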

But in a hybrid h5 app, loading resources for the first time is still time-consuming. With support from the app side, we can preload a JavaScript script that fetches the pages needing PWA caching and completes the caching ahead of time.

For pages that are not server-rendered, we still cannot avoid the time the browser spends rendering the html. How can we reduce it?

There are two points. First, the first load can only ever be sped up by loading in advance, so the app-side preload script described above still applies. Second, after the browser gets the data and renders the html, the page serializes itself with outerHTML and caches the result into cacheStorage. On the second visit the local copy takes precedence, and an html request is issued at the same time, with a unique identifier compared to decide whether an update is needed.

This family of PWA schemes can replace the offline package strategy, with the benefit of being a web standard that applies to any ordinary H5 page where service workers are supported. Where compatibility allows, adding it is recommended.

NSR rendering

At GMTC 2019, the global big front-end technology conference, the UC team presented a 0.3-second "flash open" scheme. Its NSR is a front-end version of SSR, which is very enlightening.

The core idea is to have the browser start a JS runtime, render the downloaded html template together with the prefetched feed data ahead of time, and then put the resulting html into an in-memory MemoryCache, so that the page is visible the moment the user taps.
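As a rough illustration of pre-rendering into an in-memory cache (not the UC team's implementation, which the talk summary does not detail), the sketch below fills a `{{field}}` template for each prefetched feed item and keeps the result in a small bounded cache. The template syntax, `FeedItem`, `MemoryCache`, and `prerender` are all assumptions of this example.

```typescript
// Hypothetical shape of a prefetched feed item.
interface FeedItem {
  id: string;
  title: string;
  body: string;
}

// Fills {{field}} placeholders (syntax assumed for this sketch).
// Note: a real renderer must also escape values before inserting them into html.
function renderTemplate(template: string, item: FeedItem): string {
  const fields: Record<string, string> = { id: item.id, title: item.title, body: item.body };
  return template.replace(/\{\{(\w+)\}\}/g, (_match: string, key: string) =>
    Object.prototype.hasOwnProperty.call(fields, key) ? fields[key] : ""
  );
}

// Small in-memory cache that evicts the oldest entry when full (capacity >= 1 assumed).
class MemoryCache {
  private readonly pages = new Map<string, string>();
  private readonly capacity: number;

  constructor(capacity: number) {
    this.capacity = capacity;
  }

  put(id: string, html: string): void {
    if (!this.pages.has(id) && this.pages.size >= this.capacity) {
      // Map iterates in insertion order, so the first key is the oldest.
      const oldest = this.pages.keys().next().value;
      if (oldest !== undefined) this.pages.delete(oldest);
    }
    this.pages.set(id, html);
  }

  get(id: string): string | undefined {
    return this.pages.get(id);
  }
}

// Renders every prefetched item ahead of time, before the user taps anything.
function prerender(template: string, items: FeedItem[], cache: MemoryCache): void {
  for (const item of items) {
    cache.put(item.id, renderTemplate(template, item));
  }
}
```

When the user taps an item, the client can hand `cache.get(id)` straight to the webview and fall back to the normal loading path on a miss. The bounded capacity reflects the memory cost of pre-rendering pages the user may never open.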

NSR distributes the SSR rendering work to each user's device, which reduces request pressure on the back end while pushing page-open speed to the extreme.

The problem is that prefetching data and pre-rendering bring extra traffic and performance overhead, traffic especially, so predicting user behavior more accurately to raise the hit rate is very important. NSR-like schemes are still being explored step by step.

Client PWA

In actual testing, and from discussions with colleagues on the browser team, service-worker performance inside the webview turned out to be worse than expected. After service worker was removed from one project, overall access speed across the board improved by about 300ms. This suggests a new idea, and a new challenge, for hybrid applications: could the client itself implement a basic set of service-worker APIs? That would stay compatible with the web standard. For now it is only an idea, and many questions remain to be explored, such as the actual performance of service worker in the webview, future support, the cost of implementing it ourselves, and the final effect and value.

Mini programs

The mini program ecosystem is already very mature. The major companies have each launched mini programs on their own platforms, and domestic vendors keep pushing for a W3C MiniApp standard. In both loading speed and page smoothness, mini programs outperform H5 pages. The reason is that, by standardizing and constraining development at the architecture level, a mini program separates webview rendering from js execution internally, and then applies offline packages, page splitting, page preloading, and a series of other optimizations. Mini programs therefore get the effect of many H5 optimizations built in, at the expense of the web's flexibility. For hybrid development, though, it is a very good direction: the native client supports a mini program environment at the bottom layer, and a large share of business logic is then developed as mini programs, combining iteration speed with performance.

Conclusion

This article summarizes more than a dozen articles on second-open that I have read and sorted through recently, along with some recent thinking and practice, and extracts some representative schemes from them. Whatever the scheme, the overall ideas and directions turn out to be:

- Reduce intermediate steps along the whole chain. For example, turn serial steps into parallel ones, including in the internal execution mechanism of mini programs.

- Preload and pre-execute as much as possible. For example, go from data prefetching to page prefetching and pre-rendering.

Every gain comes at a cost: acceleration essentially trades more network, memory, and CPU for speed, that is, space for time.

References:

- Baidu: H5 first-screen optimization practice

- Tencent Mobile QQ: VasSonic second-open

- UC: feed "flash open" optimization practice

- Toutiao: H5 second-open scheme

Author: flyfu wang

Source: AlloyTeam