The interviewer's 11 favorite Redis interview questions, sorted out for you

May 31, 2021

Table of contents

1. Tell me what the basic data types are for Redis

2. Why is Redis so fast?

3. So why did Redis 6.0 then switch to multithreading?

4. Do you know what a hotkey is? How to solve the hotkey problem?

5. What is cache breakdown, cache penetration, cache avalanche?

6. What are Redis's expiration policies?

7. So what about expired keys that neither periodic nor lazy deletion has removed?

8. What are the persistence methods? What's the difference?

9. How do I achieve the high availability of Redis?

10. Can you tell me how redis clustering works?

11. Do you understand the Redis transaction mechanism?

Tell me what the basic data types are for Redis

- Strings: Redis does not represent strings directly with traditional C strings; instead it implements an abstract type called the simple dynamic string (SDS). C strings do not record their own length, while SDS stores it, which reduces the cost of getting a string's length from O(N) to O(1), prevents buffer overflows, and reduces the number of memory reallocations needed when a string's length changes.

- Linked list (linkedlist): The Redis linked list is a doubly linked, acyclic list. Features such as publish/subscribe, the slow log, and monitors are implemented with it. Each node is represented by a listNode structure with pointers to the previous and next nodes; the head node's previous pointer and the tail node's next pointer point to NULL.

- Dictionary (hashtable): An abstract data structure that holds key-value pairs. Redis uses a hash table as the underlying implementation. Each dictionary has two hash tables, one for normal use and one used during rehashing. The hash table resolves key collisions by separate chaining: key-value pairs assigned to the same index form a singly linked list. When the hash table grows or shrinks, the rehash is not done in one pass but progressively, so that the service stays available.

- Skip list (skiplist): The skip list is one of the underlying implementations of the sorted set; in Redis it is used for sorted set keys and as an internal structure in cluster nodes. The Redis skip list consists of zskiplist and zskiplistNode: zskiplist holds information about the skip list (header node, tail node, length, and so on), and zskiplistNode represents a skip list node. The level height of each node is a random number between 1 and 32. Within one skip list several nodes can have the same score, but each node's member object must be unique. Nodes are sorted by score; if the scores are equal, they are sorted by member object.

- Integer set (intset): An abstract data structure for a set of integer values, with no duplicate elements, implemented on top of an array.

- Compressed list (ziplist): A sequential data structure developed to save memory. It can contain multiple nodes, each holding a byte array or an integer value.
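
The progressive rehash described for the dictionary can be sketched in Python. This is a toy model, not Redis's C implementation: the class name `ProgressiveDict`, the doubling rule, and the "one bucket per operation" step size are assumptions chosen for illustration.

```python
class ProgressiveDict:
    """Toy dictionary with two bucket tables and step-by-step rehashing."""

    def __init__(self, size=4):
        # tables[0] is the table in normal use, tables[1] exists only while rehashing.
        self.tables = [[[] for _ in range(size)], None]
        self.rehash_idx = -1  # -1 means no rehash is in progress
        self.used = 0

    def _rehash_step(self):
        # Move at most one non-empty bucket from the old table to the new one.
        if self.rehash_idx < 0:
            return
        old, new = self.tables
        while self.rehash_idx < len(old) and not old[self.rehash_idx]:
            self.rehash_idx += 1
        if self.rehash_idx < len(old):
            for key, value in old[self.rehash_idx]:
                new[hash(key) % len(new)].append((key, value))
            old[self.rehash_idx] = []
            self.rehash_idx += 1
        if self.rehash_idx >= len(old):
            # Old table is drained: the new table becomes the table in normal use.
            self.tables = [new, None]
            self.rehash_idx = -1

    def _find(self, key):
        # During a rehash a key may live in either table, so check both.
        for table in self.tables:
            if table is None:
                continue
            bucket = table[hash(key) % len(table)]
            for i, (k, _) in enumerate(bucket):
                if k == key:
                    return bucket, i
        return None, -1

    def set(self, key, value):
        self._rehash_step()
        bucket, i = self._find(key)
        if bucket is not None:
            bucket[i] = (key, value)
            return
        if self.rehash_idx < 0 and self.used >= len(self.tables[0]):
            # Table is full: allocate a table twice as large and start rehashing.
            self.tables[1] = [[] for _ in range(len(self.tables[0]) * 2)]
            self.rehash_idx = 0
        # While rehashing, new keys go straight into the new table.
        target = self.tables[1] if self.rehash_idx >= 0 else self.tables[0]
        target[hash(key) % len(target)].append((key, value))
        self.used += 1

    def get(self, key, default=None):
        self._rehash_step()
        bucket, i = self._find(key)
        return bucket[i][1] if bucket is not None else default
```

Every `get` or `set` pays for moving only one bucket, so no single operation has to wait for the whole table to be copied.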

Based on these underlying data structures, Redis builds its own object system, which includes the string object (string), list object (list), hash object (hash), set object (set), and sorted set object (zset). Each object uses at least one of the underlying data structures.

Redis gains flexibility and efficiency through each object's encoding property, which it chooses automatically for the scenario at hand. The encodings of the different objects are as follows:

- String object (string): int (integer), embstr (simple dynamic string in embstr encoding), raw (simple dynamic string)

- List object (list): ziplist, linkedlist

- Hash object (hash): ziplist, hashtable

- Set object (set): intset, hashtable

- Sorted set object (zset): ziplist, skiplist

Why is Redis so fast?

Redis is very fast: a single Redis instance can handle more than 100,000 requests per second, roughly dozens of times what MySQL can handle. The main reasons for this speed are:

- It operates entirely in memory

- It is implemented in C on top of optimized data structures; Redis has heavily optimized its basic data structures, so performance is very high

- Commands run on a single thread, so there is no context-switching cost

- It uses non-blocking I/O multiplexing
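
To make the last two points concrete, here is a minimal sketch of a single-threaded event loop that serves several connections through one I/O multiplexer. It uses Python's standard `selectors` module and in-process socket pairs instead of real network clients; the `serve_once` helper and the upper-casing "protocol" are made up for illustration and have nothing to do with Redis's actual wire format.

```python
import selectors
import socket

def serve_once(sel, timeout=1.0):
    """Run one loop iteration: handle every socket the multiplexer reports as ready."""
    for key, _ in sel.select(timeout):
        conn = key.fileobj
        data = conn.recv(1024)  # does not block: the socket is known to be readable
        if data:
            conn.sendall(b"+" + data.upper() + b"\r\n")
        else:
            # An empty read means the peer closed the connection.
            sel.unregister(conn)
            conn.close()

sel = selectors.DefaultSelector()
clients, server_sides = [], []
for _ in range(3):
    client, server_side = socket.socketpair()
    server_side.setblocking(False)
    sel.register(server_side, selectors.EVENT_READ)
    clients.append(client)
    server_sides.append(server_side)

# Three "clients" send a request before the loop runs.
for i, client in enumerate(clients):
    client.sendall(b"ping%d" % i)

serve_once(sel)  # one thread, one pass, three connections served
replies = [client.recv(1024) for client in clients]

sel.close()
for sock in clients + server_sides:
    sock.close()
```

One thread waits on all sockets at once and only touches those that are ready, which is why no locks or context switches between worker threads are needed.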

So why did Redis 6.0 then switch to multithreading?

Redis has not abandoned the single thread entirely. It still executes commands on a single thread; multiple threads are used only for reading and writing network data and for protocol parsing.

This is because the performance bottleneck of Redis is network I/O rather than the CPU, so using multiple threads improves the efficiency of I/O reads and writes and thereby the performance of Redis as a whole.

Do you know what a hotkey is? How to solve the hotkey problem?

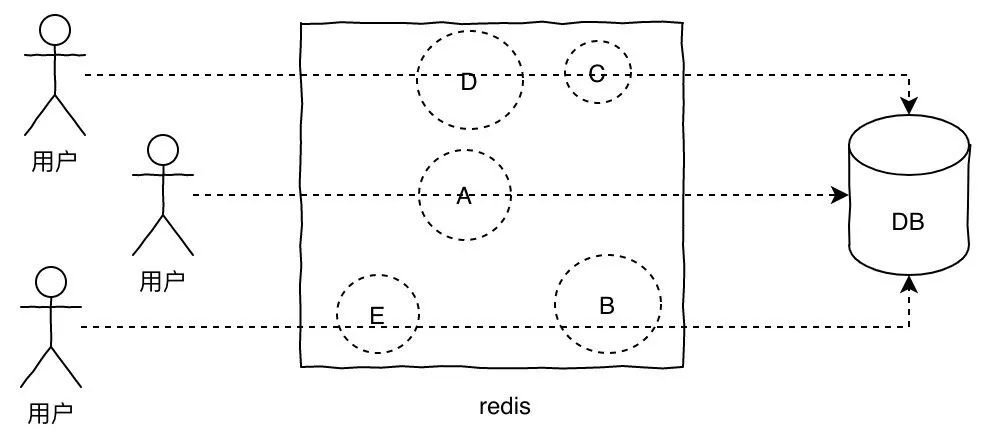

The hotkey problem occurs when hundreds of thousands of requests suddenly access one particular key in Redis. Traffic becomes so concentrated that it hits the limit of the physical network card, which can bring down the Redis server and trigger an avalanche.

Solutions for hotkeys:

- Spread the hotkey across different servers in advance to reduce the pressure

- Add a second-level cache: load hotkey data into local memory ahead of time and, if Redis goes down, query that memory
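
Both ideas can be sketched together in a few lines of Python. Everything here is hypothetical: the `key#i` naming of the copies, the `LocalCache` class, and the `remote_get` callback that stands in for a real Redis read. It assumes the copies of the hot key were written ahead of time.

```python
import random
import time

def shard_key(key, copies):
    """Pick one of `copies` pre-written copies of a hot key, e.g. 'item:1#3'."""
    return f"{key}#{random.randrange(copies)}"

class LocalCache:
    """In-process second-level cache with a per-entry time to live."""

    def __init__(self, ttl_seconds):
        self.ttl = ttl_seconds
        self.data = {}

    def put(self, key, value):
        self.data[key] = (value, time.monotonic() + self.ttl)

    def get(self, key):
        entry = self.data.get(key)
        if entry is None or entry[1] < time.monotonic():
            self.data.pop(key, None)
            return None
        return entry[0]

def read_hot_key(key, local, remote_get, copies=8):
    # 1. Try local memory first; 2. otherwise read a random copy from the remote cache.
    value = local.get(key)
    if value is None:
        value = remote_get(shard_key(key, copies))
        if value is not None:
            local.put(key, value)
    return value
```

Because each copy may live on a different server, reads for the same logical key are spread out, and repeated reads within the TTL never leave the process.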

What is cache breakdown, cache penetration, cache avalanche?

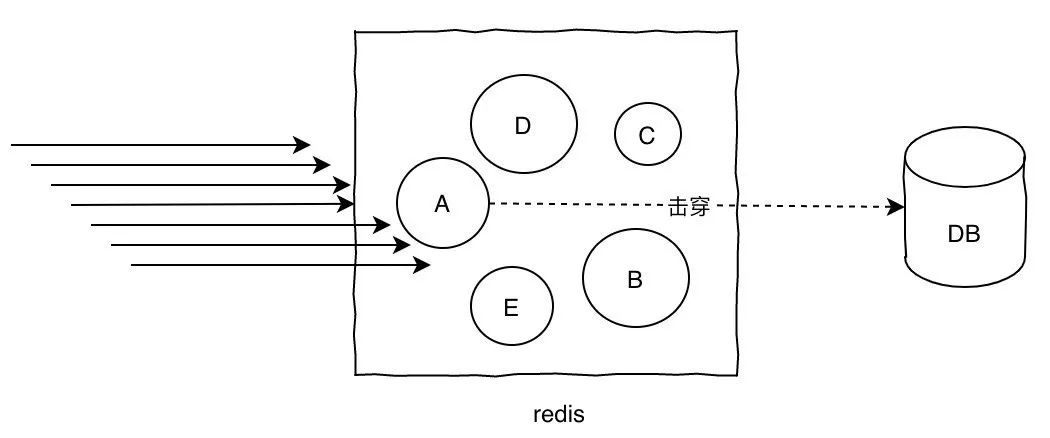

Cache breakdown

Cache breakdown means that a single key receives very high concurrent access and, when it expires, all of those requests hit the DB directly. It is similar to the hotkey problem, except that here it is the expiration that sends the requests to the DB all at once.

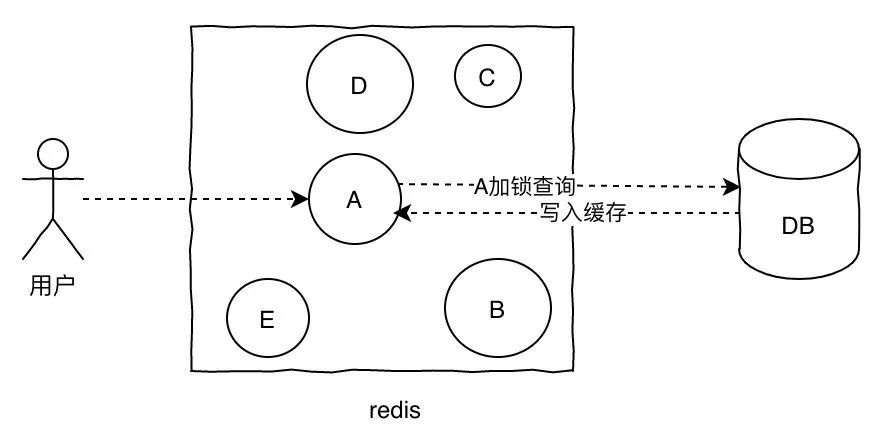

Solutions:

- Update under a lock: for example, a request queries A and finds that it is not in the cache; it locks key A, queries the database, writes the result to the cache, and returns it to the user, so that later requests can get the data from the cache.

- Store the expiration time inside the value and keep refreshing it asynchronously, so that the key never actually expires.

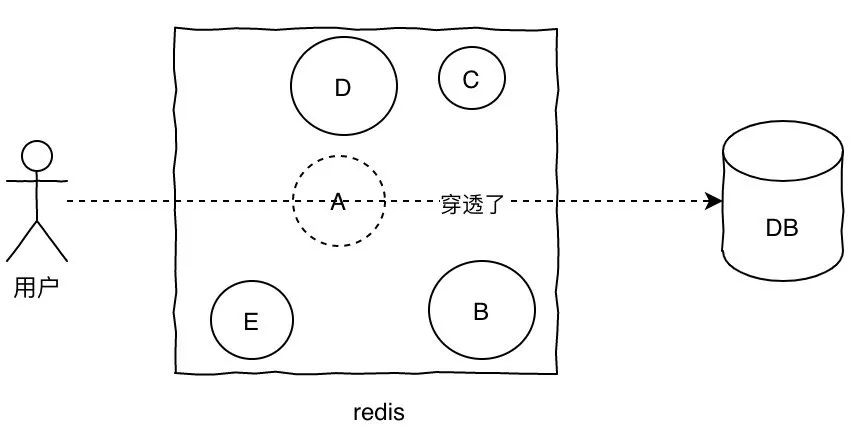
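
The lock-update approach can be sketched as follows. This is a single-process illustration with `threading.Lock`; a plain dict stands in for Redis, `load_from_db` is a hypothetical loader, and a real deployment with several application servers would need a distributed lock instead.

```python
import threading

cache = {}                         # stands in for Redis
key_locks = {}                     # one lock per key being rebuilt
key_locks_guard = threading.Lock()

def get_with_lock(key, load_from_db):
    value = cache.get(key)
    if value is not None:
        return value
    # Get (or create) the lock that protects this particular key.
    with key_locks_guard:
        lock = key_locks.setdefault(key, threading.Lock())
    with lock:
        # Re-check: another thread may have filled the cache while we waited.
        value = cache.get(key)
        if value is None:
            value = load_from_db(key)
            cache[key] = value
        return value
```

Only the first thread that misses pays for the database query; the others block briefly on the lock and then read the freshly cached value.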

Cache penetration

Cache penetration means that the queried data is not in the cache (typically because it does not exist in the database either, so it can never be cached), and every request hits the DB as if the cache did not exist.

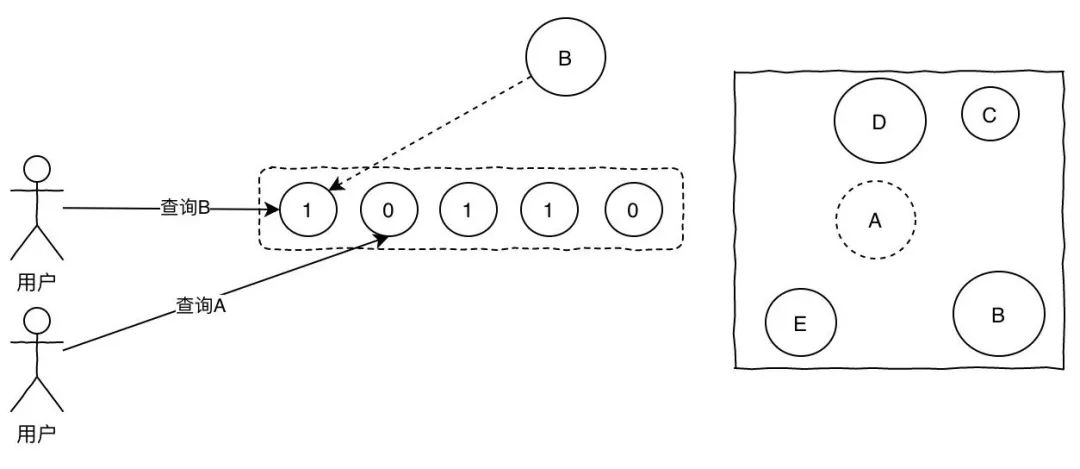

For this problem, add a Bloom filter layer. A Bloom filter works like this: when you store a value, hash functions map it to K positions in a bit array, and those positions are set to 1.

Then, when a user queries A and one of A's positions in the Bloom filter is 0, the request returns directly and no penetrating request reaches the DB.

Obviously, one problem with the Bloom filter is false positives: it is just an array, and several values may fall on the same positions. In theory, the longer the array, the lower the false-positive rate, so the size should be chosen according to the actual situation.
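
A minimal Bloom filter along these lines might look like the following. The bit-array size, the number of hash functions, and the use of salted SHA-256 to derive the K positions are arbitrary choices for this sketch, not what any particular Redis module does.

```python
import hashlib

class BloomFilter:
    def __init__(self, num_bits=1 << 16, num_hashes=4):
        self.num_bits = num_bits
        self.num_hashes = num_hashes
        self.bits = bytearray(num_bits // 8 + 1)

    def _positions(self, item):
        # Derive K positions by hashing the item with K different salts.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def add(self, item):
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item):
        # False means "definitely absent"; True means "possibly present".
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))
```

A request whose key fails `might_contain` can be rejected before it touches the cache or the DB; a key that passes may still be a false positive and simply falls through to the normal lookup.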

Cache avalanche

When the cache fails on a large scale at some moment, for example when the cache service goes down, a large number of requests hit the DB directly, which can crash the entire system. This is called an avalanche. Unlike breakdown and the hotkey problem, an avalanche means that a large portion of the cache fails at once.

Several solutions for avalanches:

- Set different expiration times for different keys so that they do not all expire at the same time

- Rate limiting: if Redis goes down, limit the traffic so that a flood of simultaneous requests does not crash the DB

- Second-level cache, the same as in the hotkey solution
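
The first solution is often implemented by adding random jitter to a base expiration time. A small sketch, where the helper name and the 20% spread are assumptions:

```python
import random

def ttl_with_jitter(base_seconds, jitter_ratio=0.2):
    """Return the base TTL shifted by a random amount of up to +/- jitter_ratio."""
    spread = int(base_seconds * jitter_ratio)
    return base_seconds + random.randint(-spread, spread)

# Keys written in the same batch now expire at different moments.
ttls = [ttl_with_jitter(3600) for _ in range(1000)]
```

With a one-hour base and a 20% spread, expirations are scattered over a window of roughly 24 minutes instead of landing on the same second.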

What are Redis's expiration policies?

Redis has two main expiration deletion policies:

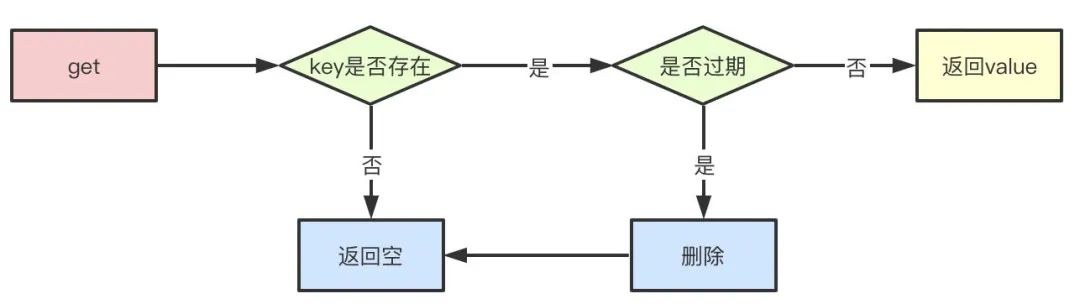

Lazy deletion

Lazy deletion means the key is checked when we query it and deleted if its expiration time has been reached. The obvious drawback is that expired keys that are never accessed are never deleted and keep occupying memory.

Periodic deletion

Periodic deletion means that Redis checks the database at intervals and removes the expired keys in it. Since it is not feasible to poll every key, Redis takes a random sample of keys each time, checks them, and deletes the expired ones.
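
The two policies can be modeled in a toy key-value store. The class below is an illustration only: the names are invented, the clock is passed in explicitly as `now` to keep the example deterministic, and the sample size of 20 is an assumption rather than a statement about Redis's internals.

```python
import random

class ExpiringStore:
    def __init__(self):
        self.data = {}
        self.expires = {}  # key -> absolute expiration timestamp

    def set(self, key, value, expire_at=None):
        self.data[key] = value
        if expire_at is None:
            self.expires.pop(key, None)
        else:
            self.expires[key] = expire_at

    def _expired(self, key, now):
        return key in self.expires and self.expires[key] <= now

    def _delete(self, key):
        self.data.pop(key, None)
        self.expires.pop(key, None)

    def get(self, key, now):
        # Lazy deletion: expiry is checked only when the key is accessed.
        if self._expired(key, now):
            self._delete(key)
            return None
        return self.data.get(key)

    def active_expire_cycle(self, now, sample_size=20):
        # Periodic deletion: test a random sample instead of scanning every key.
        candidates = list(self.expires)
        sample = random.sample(candidates, min(sample_size, len(candidates)))
        removed = 0
        for key in sample:
            if self._expired(key, now):
                self._delete(key)
                removed += 1
        return removed
```

Note that after one cycle some expired keys are still in memory: neither policy alone guarantees that every expired key is reclaimed promptly.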

So what about expired keys that neither periodic nor lazy deletion has removed?

Suppose the periodic random sampling never picks some expired keys and nothing ever queries them: they would stay in Redis and could not be deleted. That is when Redis's memory eviction mechanism comes in:

- volatile-lru: evict the least recently used key among keys that have an expiration time set

- volatile-ttl: evict the key that will expire soonest among keys that have an expiration time set

- volatile-random: evict a random key among keys that have an expiration time set

- allkeys-lru: evict the least recently used key among all keys

- allkeys-random: evict a random key among all keys

- noeviction: when memory reaches the limit, new write operations return an error
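
As an illustration of two of these policies, here is a sketch of allkeys-lru and noeviction on top of `collections.OrderedDict`. It simplifies in two ways that should be kept in mind: the limit is a number of keys rather than a memory size, and the LRU order is exact, whereas Redis approximates LRU by sampling.

```python
from collections import OrderedDict

class LruStore:
    def __init__(self, max_keys, policy="allkeys-lru"):
        self.max_keys = max_keys
        self.policy = policy
        self.data = OrderedDict()  # least recently used key comes first

    def get(self, key):
        if key not in self.data:
            return None
        self.data.move_to_end(key)  # mark as most recently used
        return self.data[key]

    def set(self, key, value):
        if key not in self.data and len(self.data) >= self.max_keys:
            if self.policy == "noeviction":
                raise MemoryError("limit reached: write rejected under noeviction")
            self.data.popitem(last=False)  # evict the least recently used key
        self.data[key] = value
        self.data.move_to_end(key)
```

The volatile-* variants would apply the same selection but only among keys that have an expiration time set.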

What are the persistence methods? What's the difference?

Redis persistence schemes are divided into RDB and AOF.

RDB

RDB persistence can be triggered manually or run periodically according to the configuration. It saves the database state at a point in time to an RDB file, a compressed binary file from which that state can be restored. Because the RDB file is stored on disk, the database state can be restored as long as the file exists, even if Redis crashes or exits.

RDB files can be generated with SAVE or BGSAVE.

The SAVE command blocks the Redis process until the RDB file has been generated. Redis cannot process any command requests while it is blocked, which is clearly undesirable.

BGSAVE forks a child process that generates the RDB file, while the parent process continues to process command requests without blocking.

AOF

Unlike RDB, AOF records the state of the database by saving the write commands executed by the Redis server.

AOF implements persistence in three steps: append, write, and sync.

- When AOF persistence is enabled and the server executes a write command, the command is appended to the end of the aof_buf buffer

- Before each event loop iteration ends, the server calls the flushAppendOnlyFile function, which decides whether to save the contents of aof_buf to the AOF file; this is controlled by the appendfsync setting:

always ## write the contents of aof_buf to the AOF file and sync it

everysec ## write the contents of aof_buf to the AOF file; if more than 1 second has passed since the AOF file was last synced, sync it again

no ## write the contents of aof_buf to the AOF file but do not sync it; the operating system decides when to sync

If not set, the default is everysec. always is the safest (at most the write commands of one event loop are lost) but performs worst; everysec can lose only about 1 second of data; no performs similarly to everysec, but on a crash it loses all write commands issued since the AOF file was last synced.
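
The append, write, and sync steps and the three appendfsync modes can be imitated with ordinary file calls. This is a sketch under several assumptions: the `AofWriter` class is invented, commands are stored as plain text lines rather than in the Redis protocol format, and the clock is passed in as `now` so the behavior is easy to follow.

```python
import os

class AofWriter:
    def __init__(self, path, appendfsync="everysec"):
        self.fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_APPEND, 0o644)
        self.policy = appendfsync
        self.buf = bytearray()   # plays the role of aof_buf
        self.last_fsync = 0.0
        self.fsync_calls = 0

    def feed(self, command):
        # Step 1 (append): buffer the write command in memory.
        self.buf += command.encode() + b"\n"

    def flush(self, now):
        # Step 2 (write): hand the buffer to the operating system.
        if self.buf:
            os.write(self.fd, bytes(self.buf))
            self.buf.clear()
        # Step 3 (sync): force the data to disk, depending on the policy.
        due = self.policy == "everysec" and now - self.last_fsync > 1.0
        if self.policy == "always" or due:
            os.fsync(self.fd)
            self.fsync_calls += 1
            self.last_fsync = now
        # Under "no" the operating system decides when to sync.

    def close(self):
        os.close(self.fd)
```

Calling `flush` once per event loop iteration shows the trade-off directly: always pays for an fsync on every iteration, everysec at most once per second, and no never calls it.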

How do I achieve the high availability of Redis?

To achieve high availability, one machine is certainly not enough. Redis offers two options for high availability.

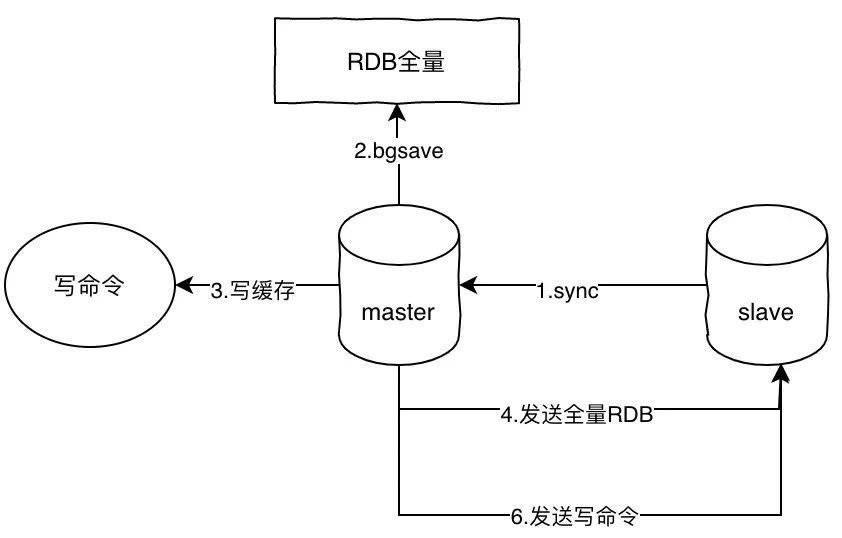

Master-slave architecture

Master-slave mode is the simplest way to implement high availability, and its core is master-slave synchronization, which works as follows:

- The slave sends the sync command to the master

- After receiving sync, the master executes bgsave to generate a full RDB file

- The master records the write commands it receives in the meantime in a buffer

- When bgsave finishes, the master sends the RDB file to the slave, which loads it

- The master sends the buffered write commands to the slave, which executes them

The command written here is sync, but since Redis 2.8 psync has replaced sync, because sync is very resource-intensive and psync is more efficient.

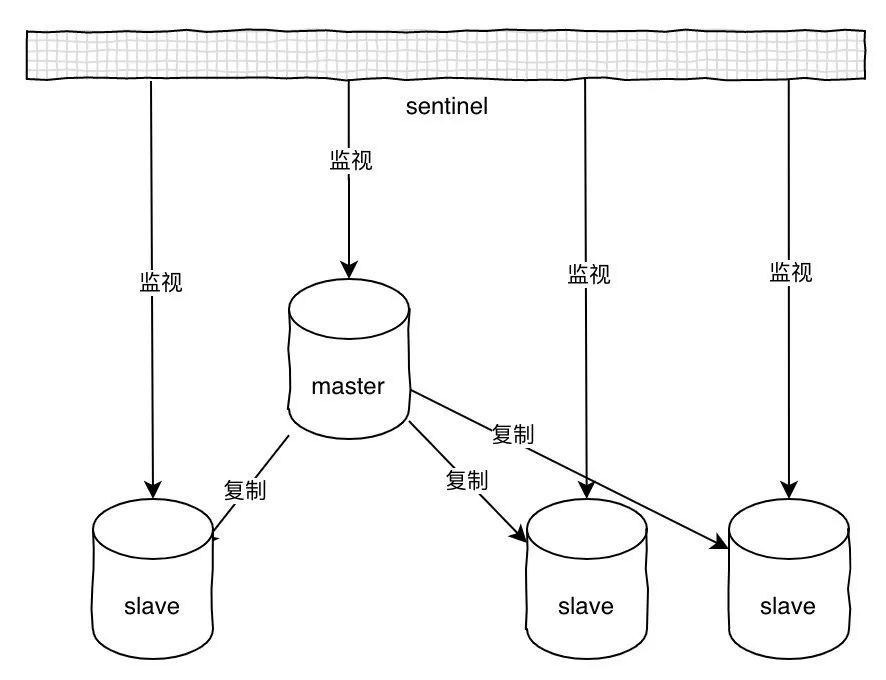
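
The full synchronization steps listed above can be walked through with two small in-memory classes. This is a conceptual model only: `Master`, `Replica`, and their methods are invented names, a deep copy stands in for the RDB file produced by bgsave, and no networking is involved.

```python
import copy

class Master:
    def __init__(self):
        self.data = {}
        self.backlog = None  # buffer for writes that arrive while a snapshot is in flight

    def write(self, key, value):
        self.data[key] = value
        if self.backlog is not None:
            self.backlog.append((key, value))

    def start_sync(self):
        # Stands in for bgsave: take a point-in-time snapshot and start buffering.
        self.backlog = []
        return copy.deepcopy(self.data)

    def finish_sync(self):
        # Hand over the writes buffered since the snapshot and stop buffering.
        pending, self.backlog = self.backlog, None
        return pending

class Replica:
    def __init__(self):
        self.data = {}

    def load_snapshot(self, snapshot):
        self.data = dict(snapshot)

    def apply(self, commands):
        for key, value in commands:
            self.data[key] = value
```

The replica first loads the snapshot and then replays the buffered writes, after which its data matches the master's.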

Sentinel

The disadvantage of the master-slave setup is obvious: if the master goes down, no data can be written, the slaves lose their purpose, and the whole architecture is unavailable unless you switch over manually, mainly because there is no automatic failover mechanism. Sentinel is much more comprehensive than a plain master-slave architecture, offering automatic failover, cluster monitoring, message notification, and more.

Sentinel can monitor multiple master-slave groups at the same time. When a monitored master goes offline, it automatically promotes one of the slaves to master, and the new master continues to receive commands. The whole process is as follows:

- Initialize Sentinel, replacing the normal Redis code with Sentinel-specific code

- Initialize the masters dictionary and server information; the server information mainly holds ip:port and records the address and ID of each instance

- Create two connections to the master, a command connection and a subscription connection, and subscribe to the __sentinel__:hello channel

- Send the info command to the master every 10 seconds to get current information about the master and all the slaves under it

- When the master is found to have a new slave, Sentinel also establishes two connections to the new slave and sends it the info command every 10 seconds to update the master information

- Sentinel sends the ping command to all servers every 1 second; if a server keeps returning invalid replies for the configured response time, it is marked as offline

- Elect a leader Sentinel, which requires the consent of more than half of the Sentinels

- The leader Sentinel picks one of the offline master's slaves and promotes it to master

- Make all the other slaves replicate from the new master instead

- Set the original master as a slave of the new master; when the original master reconnects, it becomes a slave of the new master

Sentinel sends the ping command every 1 second to all instances, including masters, slaves, and other Sentinels, and judges from the responses whether they are offline; this is called subjectively down. When a master is judged subjectively down, the Sentinel asks the other Sentinels that monitor it; if more than half of them also consider it offline, it is marked objectively down and failover is triggered.
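
The two-stage decision can be expressed as a pair of small functions. The function names and the time units are assumptions for this sketch. Note that in a real Sentinel deployment the number of agreeing Sentinels needed for the objective state is a configurable quorum; the `majority` helper below reproduces the "more than half" rule used in this article.

```python
def is_subjectively_down(last_valid_reply_at, now, down_after_seconds):
    """One Sentinel's own view: no valid reply for longer than the configured time."""
    return now - last_valid_reply_at > down_after_seconds

def majority(total_sentinels):
    """Smallest number of votes that is more than half."""
    return total_sentinels // 2 + 1

def is_objectively_down(votes, quorum):
    """Collective view: enough Sentinels (including this one) agree the master is down."""
    return sum(1 for vote in votes if vote) >= quorum
```

For example, with three Sentinels the quorum under the majority rule is 2, so one Sentinel's timeout alone never triggers a failover.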

Can you tell me how redis clustering works?

Sentinel makes Redis highly available, but if you also want to support high concurrency while holding large amounts of data, you need Redis Cluster. Redis Cluster is the distributed data storage solution provided by Redis; it shares data across nodes through sharding and also provides replication and failover.

Nodes

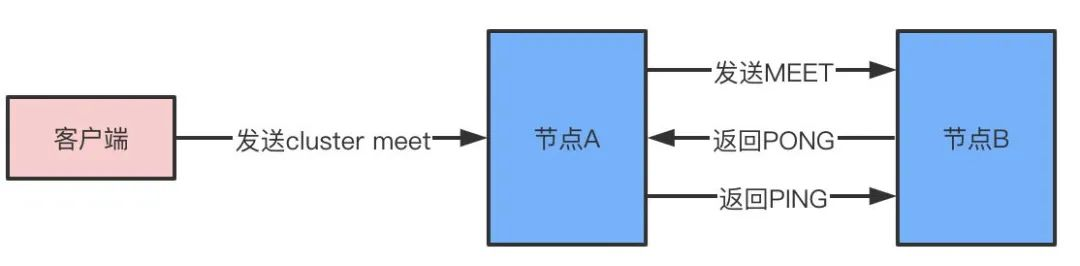

A Redis cluster consists of multiple nodes, which are connected to one another with the cluster meet command. The node handshake goes as follows:

- Node A receives the client's cluster meet command

- A sends a meet message to B, using the IP address and port number it received

- Node B receives the meet message and returns a pong

- A now knows that B received the meet message and returns a ping message; the handshake has succeeded

- Finally, node A spreads node B's information to the other nodes in the cluster through the gossip protocol, and the other nodes also shake hands with B

Slots

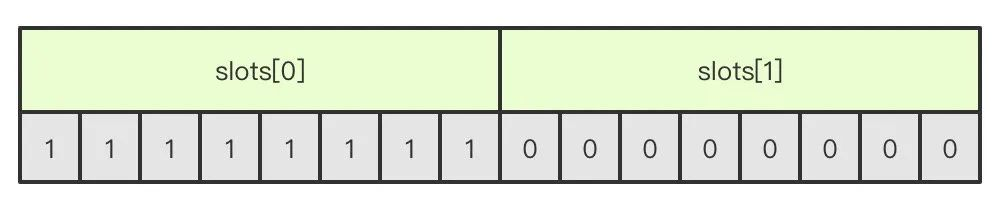

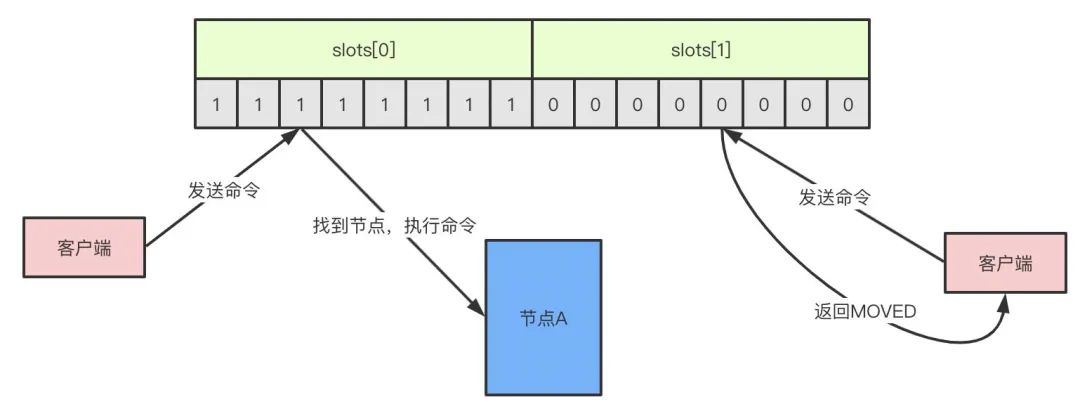

Redis stores data by sharding the cluster: the whole cluster database is divided into 16384 slots, and each node in the cluster can handle anywhere from 0 to 16384 slots. When all 16384 slots are being handled by some node, the cluster is online; if even one slot is not handled, the cluster is offline. The cluster addslots command assigns slots to a node.

A node's slots are recorded in a bit array of 16384/8 = 2048 bytes. Each bit is 1 if the node handles that slot and 0 if it does not; as shown in the figure, node A handles slots 0-7.
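
The 2048-byte bit array is easy to reproduce. In this sketch the class and method names are invented; only the sizes come from the text above.

```python
NUM_SLOTS = 16384

class SlotBitmap:
    def __init__(self):
        self.bits = bytearray(NUM_SLOTS // 8)  # 16384 bits = 2048 bytes

    def assign(self, slot):
        # Byte index is slot // 8, bit index inside the byte is slot % 8.
        self.bits[slot >> 3] |= 1 << (slot & 7)

    def owns(self, slot):
        return bool(self.bits[slot >> 3] & (1 << (slot & 7)))

# Node A handles slots 0-7, which fills exactly the first byte.
node_a = SlotBitmap()
for slot in range(8):
    node_a.assign(slot)
```

Checking whether a node handles a slot is a single byte lookup and a bit mask, so it costs the same no matter how many slots the node owns.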

When a client sends a command to a node and the slot of the key belongs to that node, the node executes the command; otherwise it returns a MOVED error to the client, directing it to the correct node. (Cluster-aware clients handle the MOVED redirection automatically.)
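
The article does not say how a key is mapped to a slot; Redis Cluster computes CRC16 of the key modulo 16384, which Python's `binascii.crc_hqx` with an initial value of 0 reproduces. The sketch below ignores hash tags (the `{...}` part of a key), and the three-node layout in `owner_of` as well as the `handle_command` function are hypothetical.

```python
import binascii

def key_slot(key):
    """CRC16 (XMODEM variant) of the key, modulo the number of slots."""
    return binascii.crc_hqx(key.encode(), 0) % 16384

def owner_of(slot):
    # Hypothetical layout: three nodes, each handling a contiguous slot range.
    if slot <= 5460:
        return "127.0.0.1:7000"
    if slot <= 10922:
        return "127.0.0.1:7001"
    return "127.0.0.1:7002"

def handle_command(node, slot_owner, key):
    """Execute locally if this node handles the key's slot, else redirect."""
    slot = key_slot(key)
    owner = slot_owner(slot)
    if owner == node:
        return "OK"
    return f"MOVED {slot} {owner}"
```

With this layout the key `foo` hashes to slot 12182, so the node at port 7002 executes the command and the other two nodes answer with a MOVED redirection to it.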

When nodes are added or removed, Redis provides tools for slot migration; the whole process happens online and does not require stopping the service.

Failover

If node A sends a ping message to node B and B does not reply with a pong within the specified time, A marks B as pfail (suspected offline) and sends B's state to the other nodes in messages. If more than half of the nodes have marked B as pfail, B is marked as fail (offline) and failover takes place: a new master is chosen from B's slaves, with priority given to the slave that has replicated the most data, and it takes over the offline node's slots. The process is very similar to Sentinel's, with voting based on ideas from the Raft protocol.

Do you understand the Redis transaction mechanism?

Redis implements transactions through commands such as MULTI, EXEC, and WATCH. A transaction executes a batch of commands sequentially in one go; during execution the server does not interrupt the transaction to run other clients' requests until all of its commands have been executed. A transaction runs as follows:

- The server receives a client request, and the transaction starts with MULTI

- If the client is in the transaction state, the command is put in the transaction queue and QUEUED is returned to the client; otherwise the command is executed directly

- When the client's EXEC command is received, the server checks whether any key monitored by WATCH has been modified. If so, it returns an empty reply to the client to indicate failure; otherwise Redis traverses the transaction queue, executes all the commands saved in it, and finally returns the results to the client

The WATCH mechanism is itself a CAS mechanism: monitored keys are saved in a linked list, and if one of them is modified, the REDIS_DIRTY_CAS flag is set and the server refuses to execute the transaction.
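
The interplay of WATCH, MULTI, and EXEC can be modeled with a toy server and client. This is an in-memory illustration, not a Redis client: `MiniRedis`, `Client`, and the boolean `dirty_cas` attribute (which plays the role of the REDIS_DIRTY_CAS flag) are invented for this sketch, and only a SET command is supported.

```python
class MiniRedis:
    def __init__(self):
        self.data = {}
        self.watchers = {}  # key -> set of clients watching it

    def set(self, key, value):
        self.data[key] = value
        # Any write to a watched key marks every watching client as dirty.
        for client in self.watchers.get(key, ()):
            client.dirty_cas = True

class Client:
    def __init__(self, server):
        self.server = server
        self.queue = None       # None means the client is not inside MULTI
        self.dirty_cas = False
        self.watched = set()

    def watch(self, key):
        self.server.watchers.setdefault(key, set()).add(self)
        self.watched.add(key)

    def multi(self):
        self.queue = []
        return "OK"

    def set(self, key, value):
        if self.queue is not None:
            self.queue.append((key, value))  # queue instead of executing
            return "QUEUED"
        self.server.set(key, value)
        return "OK"

    def exec(self):
        queued, self.queue = self.queue, None
        aborted, self.dirty_cas = self.dirty_cas, False
        # EXEC always clears the client's watches, whether it succeeds or not.
        for key in self.watched:
            self.server.watchers[key].discard(self)
        self.watched.clear()
        if aborted:
            return None  # empty reply: a watched key was modified
        for key, value in queued:
            self.server.set(key, value)
        return ["OK"] * len(queued)
```

If another client writes to a watched key between WATCH and EXEC, `exec` returns `None` and none of the queued commands run; the caller is expected to retry.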

This article comes from the WeChat public account Science and Technology Miao; author: Science and Technology Miao.

The above is W3Cschool编程狮's write-up of the interviewer's 11 favorite Redis interview questions, sorted out for you.