Posts about Spark Programming guide

Spark SQL data source

May 17, 2021 17:00 · Spark Programming guide

Spark SQL supports operating on a variety of data sources through the SchemaRDD interface. A SchemaRDD can be operated on like an ordinary RDD…

Spark SQL data type

May 17, 2021 17:00 · Spark Programming guide

Numeric types: ByteType represents 1-byte signed integers, with a range of -128 to 127. ShortType represents 2-byte signed integers…
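Each integral type is a fixed-width signed integer, so its range follows directly from its width in bytes. Below is a minimal plain-Scala sketch that derives those ranges. It is not Spark API code, and the `IntegralTypeRanges` object is hypothetical. The widths for IntegerType (4 bytes) and LongType (8 bytes) come from the Spark SQL type reference, since the excerpt does not reach them.

```scala
// Hypothetical helper, not part of Spark: derives the value range of Spark SQL's
// signed integral types from their width in bytes (two's-complement, like JVM primitives).
object IntegralTypeRanges {
  // Assumed width table: Spark SQL type name -> size in bytes
  val widths: Map[String, Int] = Map(
    "ByteType"    -> 1,
    "ShortType"   -> 2,
    "IntegerType" -> 4,
    "LongType"    -> 8
  )

  // Range of an n-byte signed integer: [-2^(8n-1), 2^(8n-1) - 1]
  def range(bytes: Int): (BigInt, BigInt) = {
    val half = BigInt(1) << (8 * bytes - 1)
    (-half, half - 1)
  }

  def rangeOf(typeName: String): (BigInt, BigInt) = range(widths(typeName))
}
```

For example, `IntegralTypeRanges.rangeOf("ByteType")` gives `(-128, 127)`, matching the range quoted above.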

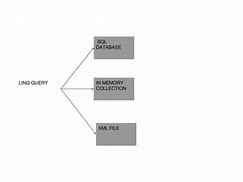

Writing language-integrated queries

May 17, 2021 17:00 · Spark Programming guide

Language-integrated queries are experimental, and for the time being only Scala is supported…

Spark SQL performance tuning

May 17, 2021 17:00 · Spark Programming guide

For some workloads, you can improve performance by caching data in memory or by turning on some experimental options. Caching data in memory…

Getting started with Spark SQL

May 17, 2021 17:00 · Spark Programming guide

The entry point into all relational functionality in Spark is the SQLContext class or one of its subclasses. All you need to create an SQLContext…

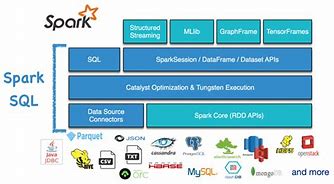

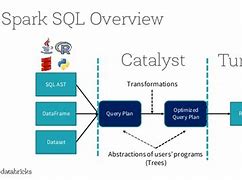

Spark SQL

May 17, 2021 17:00 · Spark Programming guide

Spark SQL allows relational queries expressed in SQL, HiveQL, or Scala to be executed using Spark. At the heart of this module is a new type of…

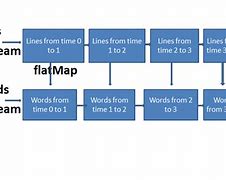

Spark Streaming fault tolerance semantics

May 17, 2021 17:00 · Spark Programming guide

In this section, we discuss how Spark Streaming behaves in the event of a node failure. To understand…

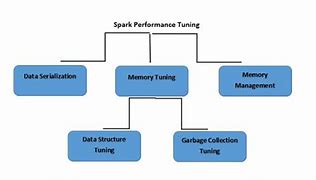

Spark Streaming memory tuning

May 17, 2021 17:00 · Spark Programming guide

Tuning the memory usage and garbage-collection behavior of Spark applications is covered in detail in the Spark Tuning Guide. In this…

Spark Streaming: setting the right batch size

May 17, 2021 17:00 · Spark Programming guide

For a Spark Streaming application running on a cluster to be stable, the system should be able to process the…
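In Spark Streaming, a stable setup generally means that each batch's processing time stays below the batch interval. If it does not, batches queue up and the delay keeps growing. Here is a minimal plain-Scala sketch of that rule. It assumes per-batch processing times measured in milliseconds, and the `BatchIntervalCheck` object and its methods are hypothetical, not Spark Streaming API.

```scala
// Hypothetical helper, not Spark Streaming API: checks the stability rule
// "batch processing time < batch interval" and simulates the resulting backlog.
object BatchIntervalCheck {
  // Stable when every batch finishes within the batch interval.
  def isStable(batchIntervalMs: Long, processingTimesMs: Seq[Long]): Boolean =
    processingTimesMs.forall(_ < batchIntervalMs)

  // Batch i arrives at i * interval but cannot start before the previous batch
  // finishes; returns how long each batch waited before starting.
  def schedulingDelaysMs(batchIntervalMs: Long, processingTimesMs: Seq[Long]): Seq[Long] = {
    val (delays, _) = processingTimesMs.zipWithIndex.foldLeft((Vector.empty[Long], 0L)) {
      case ((acc, previousFinish), (processing, i)) =>
        val arrival = i * batchIntervalMs
        val start = math.max(arrival, previousFinish)
        (acc :+ (start - arrival), start + processing)
    }
    delays
  }
}
```

With a 1000 ms interval, batches that each take 1500 ms wait 0, 500, and then 1000 ms before starting. That is the ever-growing backlog of an unstable configuration.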

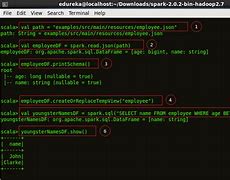

Spark SQL RDDs

May 17, 2021 18:00 · Spark Programming guide

Spark supports two ways to convert existing RDDs into SchemaRDDs. The first method uses reflection to infer the schema of an RDD that contains…

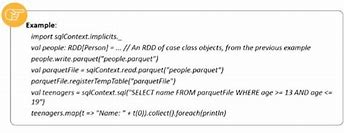

Spark SQL Parquet files

May 17, 2021 18:00 · Spark Programming guide

Parquet is a columnar format that is supported by many other data processing systems. Spark SQL provides the ability to read and write…
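To show what "columnar" means, here is an in-memory sketch that contrasts a row layout with a column layout of the same records. It does not model Parquet's actual file format (row groups, encodings, compression), and the `Person` record and helper names are hypothetical.

```scala
// Illustration only, not Parquet or Spark code: the same records stored
// row by row versus one array per column.
object ColumnarLayoutSketch {
  // Row-oriented: each record keeps all of its fields together.
  final case class Person(name: String, age: Int)

  // Column-oriented: one contiguous vector per field.
  final case class PersonColumns(names: Vector[String], ages: Vector[Int])

  def toColumns(rows: Seq[Person]): PersonColumns =
    PersonColumns(rows.map(_.name).toVector, rows.map(_.age).toVector)

  // A query over a single field only needs that field's column.
  def averageAge(cols: PersonColumns): Double =
    if (cols.ages.isEmpty) 0.0 else cols.ages.sum.toDouble / cols.ages.size
}
```

A query such as an average over one field touches only that field's column. Column-oriented formats exploit this property to avoid reading unrelated data.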

Spark SQL JSON dataset

May 17, 2021 18:00 · Spark Programming guide

Spark SQL can automatically infer the schema of a JSON dataset and load it as a SchemaRDD. This conversion can…
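Schema inference boils down to scanning the records and merging the types observed for each field. Below is a simplified sketch over records that have already been parsed into Scala maps, so JSON parsing itself is out of scope. The widening rules are an assumption for illustration, not Spark's implementation, and `JsonSchemaSketch` is a hypothetical name.

```scala
// Simplified, hypothetical schema inference; not Spark's algorithm.
object JsonSchemaSketch {
  // Coarse type name for a parsed JSON value.
  def typeOf(value: Any): String = value match {
    case _: Int | _: Long => "long"
    case _: Double        => "double"
    case _: Boolean       => "boolean"
    case _                => "string"
  }

  // Assumed merge rules: identical types stay as they are, a long/double mix
  // widens to double, and any other conflict falls back to string.
  def widen(a: String, b: String): String =
    if (a == b) a
    else if (Set(a, b) == Set("long", "double")) "double"
    else "string"

  // The schema is the union of all field names seen in any record; each field's
  // type is the widening of every type observed for it.
  def inferSchema(records: Seq[Map[String, Any]]): Map[String, String] =
    records.flatMap(_.toSeq)
      .groupBy { case (field, _) => field }
      .map { case (field, pairs) => field -> pairs.map { case (_, v) => typeOf(v) }.reduce(widen) }
}
```

For records like `{"name": "a"}`, `{"name": "b", "age": 30}`, and `{"name": "c", "age": 19.5}`, this sketch yields `name -> string` and `age -> double`.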

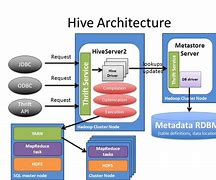

Spark SQL Hive table

May 17, 2021 18:00 · Spark Programming guide

Spark SQL also supports reading and writing data stored in Apache Hive. However, since Hive has a large number of dependencies, it is not included…

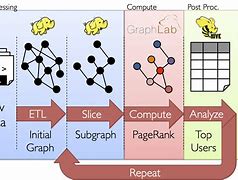

GraphX programming guide

May 17, 2021 18:00 · Spark Programming guide

GraphX is a new (alpha) Spark API for graphs and graph-parallel computation. GraphX extends the Spark RDD by introducing…
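GraphX's central abstraction is a property graph: a directed multigraph with user-defined properties attached to each vertex and edge. This plain-Scala sketch makes that data model concrete without a Spark cluster. The names echo GraphX's `VertexId`, `Edge`, and triplets, but everything here is a hypothetical stand-in, not the GraphX API.

```scala
// Hypothetical stand-in for the property-graph data model; runs without Spark.
object PropertyGraphSketch {
  type VertexId = Long

  // An edge carries its own user-defined property.
  final case class Edge[ED](srcId: VertexId, dstId: VertexId, attr: ED)

  // A triplet joins an edge with the properties of both of its endpoints.
  final case class Triplet[VD, ED](src: (VertexId, VD), dst: (VertexId, VD), attr: ED)

  // Vertices map ids to properties; edges are a plain sequence, so parallel
  // edges between the same pair of vertices are allowed (a multigraph).
  final case class PropertyGraph[VD, ED](vertices: Map[VertexId, VD], edges: Seq[Edge[ED]]) {
    def outDegrees: Map[VertexId, Int] =
      edges.groupBy(_.srcId).map { case (id, es) => id -> es.size }

    def triplets: Seq[Triplet[VD, ED]] =
      edges.map(e => Triplet(e.srcId -> vertices(e.srcId), e.dstId -> vertices(e.dstId), e.attr))
  }
}
```

Storing edges as a sequence rather than a set is what permits two differently labeled edges between the same pair of vertices.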

Getting started with Spark GraphX

May 17, 2021 18:00 · Spark Programming guide

The first step is to import Spark and GraphX into your project, as follows: `import org.apache.spark._`, `import org.apache.spark.graphx._`, …