MongoDB Sharding

May 17, 2021 MongoDB

Sharding

Besides replica sets, MongoDB supports another kind of cluster: sharding, which lets MongoDB cope with very large and fast-growing data volumes.

When MongoDB stores large amounts of data, a single machine may not be enough to hold the data or to provide acceptable read and write throughput. At that point, we can split the data across multiple machines, so that the database system can store and process more data.

Why use sharding

- All writes go to the primary node

- Queries that cannot tolerate stale (replication-lagged) data must also run on the primary node

- A single replica set is limited to 12 nodes (in the 2.x releases used here; later releases allow up to 50 members)

- Memory runs out when the request volume is large

- Local disks are not large enough

- Vertical scaling (buying a bigger machine) is expensive

MongoDB sharded cluster

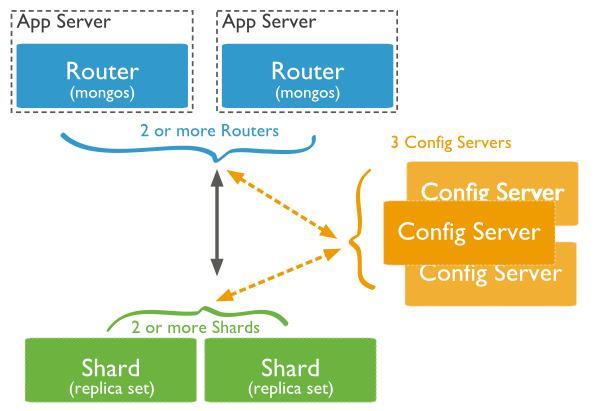

The figure above shows how a sharded cluster is laid out in MongoDB. It has three main components:

- Shard: stores the actual chunks of data. In a production environment, each shard is usually a replica set made up of several machines, so that the failure of a single host does not make the shard unavailable.

- Config Server: a mongod instance that stores the metadata for the whole cluster, including chunk information.

- Query Routers: front-end routers (mongos processes) that clients connect to. They make the whole cluster look like a single database, so front-end applications can use it transparently.
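To make the division of labour between the three roles concrete, here is a toy in-memory model, assuming range-based chunks on a single numeric key. The class names `ConfigServer`, `Shard` and `Router`, the chunk boundaries and the shard names are hypothetical; this is a sketch of the idea, not how mongos is actually implemented.

```python
from bisect import bisect_right


class ConfigServer:
    """Holds cluster metadata: sorted chunk lower bounds and the shard owning each chunk."""

    def __init__(self, lower_bounds, owners):
        # lower_bounds[i] is the inclusive lower bound of chunk i; the first chunk
        # starts at -infinity so that every key falls into some chunk.
        self.lower_bounds = lower_bounds
        self.owners = owners

    def shard_for(self, key):
        # The chunk containing `key` is the last one whose lower bound is <= key.
        index = bisect_right(self.lower_bounds, key) - 1
        return self.owners[index]


class Shard:
    """Stores the documents that belong to its chunks."""

    def __init__(self, name):
        self.name = name
        self.docs = {}


class Router:
    """Plays the role of mongos: clients only ever talk to the router."""

    def __init__(self, config, shards):
        self.config = config
        self.shards = {s.name: s for s in shards}

    def insert(self, key, doc):
        # Ask the config metadata which shard owns the key, then write there.
        self.shards[self.config.shard_for(key)].docs[key] = doc

    def find(self, key):
        return self.shards[self.config.shard_for(key)].docs.get(key)


config = ConfigServer([float("-inf"), 100, 200], ["shard0000", "shard0001", "shard0002"])
router = Router(config, [Shard("shard0000"), Shard("shard0001"), Shard("shard0002")])
router.insert(150, {"msg": "hello"})
print(config.shard_for(150))  # shard0001
print(router.find(150))       # {'msg': 'hello'}
```

The client code never names a shard: it only calls the router, which is what makes the cluster look like a single database.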

A sharding example

The ports used in this sharded setup are as follows:

- Shard Server 1: 27020

- Shard Server 2: 27021

- Shard Server 3: 27022

- Shard Server 4: 27023

- Config Server: 27100

- Route Process: 40000

Step 1: Start the shard servers

[root@100 /]# mkdir -p /www/mongoDB/shard/s0

[root@100 /]# mkdir -p /www/mongoDB/shard/s1

[root@100 /]# mkdir -p /www/mongoDB/shard/s2

[root@100 /]# mkdir -p /www/mongoDB/shard/s3

[root@100 /]# mkdir -p /www/mongoDB/shard/log

[root@100 /]# /usr/local/mongoDB/bin/mongod --port 27020 --dbpath=/www/mongoDB/shard/s0 --logpath=/www/mongoDB/shard/log/s0.log --logappend --fork

....

[root@100 /]# /usr/local/mongoDB/bin/mongod --port 27023 --dbpath=/www/mongoDB/shard/s3 --logpath=/www/mongoDB/shard/log/s3.log --logappend --fork
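The four shard servers differ only in their port and data directory, so the commands can be generated in a loop. A minimal Python sketch (3.8+ for `shlex.join`), assuming the binary path and directories used above and consecutive ports starting at 27020 as in the port table; the function names `shard_command` and `start_shards` are hypothetical. By default it only prints the commands; it creates directories and launches mongod only when called with `launch=True`.

```python
import os
import shlex
import subprocess

MONGOD = "/usr/local/mongoDB/bin/mongod"
BASE = "/www/mongoDB/shard"
FIRST_PORT = 27020  # Shard Server 1; servers 2-4 use the following ports


def shard_command(i):
    """Return (data directory, mongod argument list) for shard server s{i}."""
    dbpath = f"{BASE}/s{i}"
    args = [
        MONGOD,
        "--port", str(FIRST_PORT + i),
        f"--dbpath={dbpath}",
        f"--logpath={BASE}/log/s{i}.log",
        "--logappend",
        "--fork",
    ]
    return dbpath, args


def start_shards(count=4, launch=False):
    """Print each shard command; create directories and run mongod only if launch=True."""
    lines = []
    for i in range(count):
        dbpath, args = shard_command(i)
        lines.append(shlex.join(args))
        print(lines[-1])
        if launch:
            os.makedirs(dbpath, exist_ok=True)
            os.makedirs(f"{BASE}/log", exist_ok=True)
            # With --fork, mongod returns after the background server has started.
            subprocess.run(args, check=True)
    return lines


if __name__ == "__main__":
    start_shards()  # dry run: only prints the four commands
```

Keeping the dry run as the default makes it easy to compare the generated commands with the ones above before starting anything.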

Step 2: Start the config server

[root@100 /]# mkdir -p /www/mongoDB/shard/config

[root@100 /]# /usr/local/mongoDB/bin/mongod --port 27100 --dbpath=/www/mongoDB/shard/config --logpath=/www/mongoDB/shard/log/config.log --logappend --fork

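Note: Here we can start these servers just like ordinary MongoDB services, without adding the --shardsvr and --configsvr parameters. In the 2.x releases used here, these two parameters mainly change the default port (to 27018 and 27019 respectively; --configsvr also changes the default data directory), and since we specify the port and data directory ourselves, we can leave them out. Newer releases require shards and config servers to run as replica sets started with these flags.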
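The port rule behind this note can be summarised as a small lookup: a plain mongod listens on 27017, --shardsvr changes the default to 27018, --configsvr changes it to 27019, and an explicit --port always wins. The sketch below models only that port choice, not any other effect the flags have in a given release; `DEFAULT_PORTS` and `effective_port` are hypothetical names.

```python
# Default listening port per role flag (None = plain mongod with no role flag).
DEFAULT_PORTS = {None: 27017, "shardsvr": 27018, "configsvr": 27019}


def effective_port(role=None, port=None):
    """Port a mongod would listen on, given an optional role flag and --port value."""
    if port is not None:
        return port  # an explicit --port overrides any role-based default
    return DEFAULT_PORTS[role]


print(effective_port("configsvr"))         # 27019
print(effective_port("configsvr", 27100))  # 27100, as in Step 2
```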

Step 3: Start the route process

/usr/local/mongoDB/bin/mongos --port 40000 --configdb localhost:27100 --fork --logpath=/www/mongoDB/shard/log/route.log --chunkSize 500

Among the mongos startup parameters, chunkSize sets the chunk size in MB; the default is 64 MB.
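As a rough illustration of what chunkSize controls: a chunk is split once it grows past the configured size, so a collection needs at least ceil(data size / chunk size) chunks, and the balancer in these releases tries to even out the number of chunks per shard. The sketch computes that lower bound and an ideal even spread over the four shards; the 10 GB collection size is a made-up example, and real chunk counts are usually higher because freshly split chunks are smaller than the maximum.

```python
import math


def min_chunks(data_mb, chunk_mb):
    """Lower bound on the chunk count, since no chunk may exceed chunk_mb."""
    return math.ceil(data_mb / chunk_mb)


def even_spread(chunks, shards):
    """Ideal chunks per shard after balancing, spread as evenly as possible."""
    base, extra = divmod(chunks, shards)
    return [base + 1 if i < extra else base for i in range(shards)]


data_mb = 10 * 1024  # hypothetical 10 GB collection
for chunk_mb in (64, 500):
    n = min_chunks(data_mb, chunk_mb)
    print(chunk_mb, n, even_spread(n, 4))
# 64 160 [40, 40, 40, 40]
# 500 21 [6, 5, 5, 5]
```

A larger chunkSize such as 500 means fewer, bigger chunks: fewer splits and migrations, but each migration moves more data and the spread across shards is coarser.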

Step 4: Configure sharding

Next, log in to mongos with the MongoDB shell and add the shard nodes:

[root@100 shard]# /usr/local/mongoDB/bin/mongo admin --port 40000

MongoDB shell version: 2.0.7

connecting to: 127.0.0.1:40000/admin

mongos> db.runCommand({ addshard:"localhost:27020" })

{ "shardAdded" : "shard0000", "ok" : 1 }

......

mongos> db.runCommand({ addshard:"localhost:27029" })

{ "shardAdded" : "shard0009", "ok" : 1 }

mongos> db.runCommand({ enablesharding:"test" }) // enable sharding for the "test" database

{ "ok" : 1 }

mongos> db.runCommand({ shardcollection: "test.log", key: { id:1,time:1}})

{ "collectionsharded" : "test.log", "ok" : 1 }
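With the shard key `{ id: 1, time: 1 }`, chunk ranges are ordered first by `id` and then by `time`, much as Python tuples compare. The sketch below uses a hypothetical chunk table (the boundaries and the four shard names are invented for illustration, with `-INF` standing in for MongoDB's MinKey) to show which chunk a document lands in, and why a query on `id`, the key prefix, can be sent to a subset of shards while a query on `time` alone has to go to every shard. It is a simplified model of range-based routing, not the actual mongos logic.

```python
from bisect import bisect_right

INF = float("inf")

# Hypothetical chunk table for test.log: inclusive lower bound -> owning shard.
# Bounds are (id, time) tuples, compared lexicographically like {id: 1, time: 1}.
LOWER_BOUNDS = [(-INF, -INF), (100, -INF), (100, 500), (200, -INF)]
OWNERS = ["shard0000", "shard0001", "shard0002", "shard0003"]


def chunk_index(key):
    """Index of the chunk whose range [lower bound, next lower bound) contains key."""
    return bisect_right(LOWER_BOUNDS, key) - 1


def shard_for(doc):
    """Shard that stores a document, based on its (id, time) shard-key value."""
    return OWNERS[chunk_index((doc["id"], doc["time"]))]


def shards_for_id(id_value):
    """Shards that may hold documents with this id (a shard-key prefix query)."""
    first = chunk_index((id_value, -INF))
    last = chunk_index((id_value, INF))
    return sorted(set(OWNERS[first:last + 1]))


def shards_for_time_only():
    """Without the id prefix, no chunk range can be ruled out: every shard is queried."""
    return sorted(set(OWNERS))


print(shard_for({"id": 100, "time": 700}))  # shard0002
print(shards_for_id(100))                   # ['shard0001', 'shard0002']
print(shards_for_time_only())               # all four shards
```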

Step 5: The application code hardly needs to change: connect to the database just as you would to an ordinary MongoDB server, but point the connection at port 40000, where the mongos route process listens.
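For example, an application only needs a connection string that points at the mongos port. A minimal sketch, assuming the router runs on localhost and the application uses the `test` database sharded above; the helper name `mongos_uri` is hypothetical. With the PyMongo driver, the resulting string would be passed to `MongoClient` exactly as for a standalone server.

```python
def mongos_uri(host="localhost", port=40000, database="test"):
    """Standard MongoDB connection string pointing at the mongos router."""
    return f"mongodb://{host}:{port}/{database}"


uri = mongos_uri()
print(uri)  # mongodb://localhost:40000/test

# With PyMongo installed (not needed to run this sketch):
# from pymongo import MongoClient
# client = MongoClient(uri)
# client.test.log.insert_one({"id": 1, "time": 1})
```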