Front-End Leader Advanced Guide: Nginx

Jun 01, 2021

Table of contents

3. Thinking: How does Nginx do hot deployments?

4. Thinking: How does Nginx handle high concurrency efficiently?

5. Thinking: What if Nginx goes down?

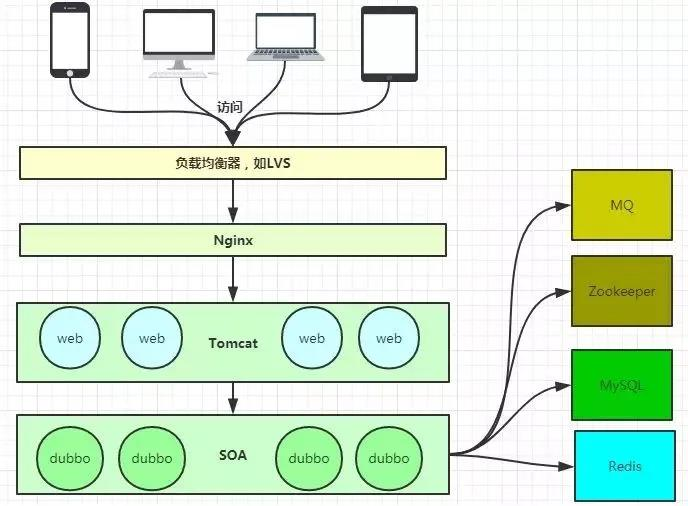

Nginx is a lightweight web server and reverse proxy server. Thanks to its small memory footprint, very fast startup, and strong high-concurrency capabilities, it is widely used in Internet projects.

The figure above roughly illustrates a popular architecture today, in which Nginx plays something like the role of an entry gateway.

Reverse proxy server?

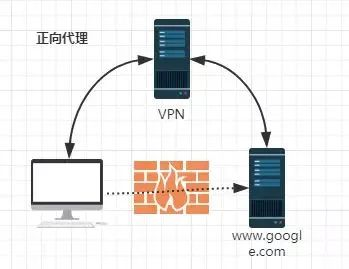

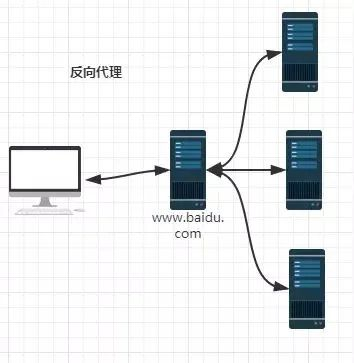

We often hear terms such as "reverse proxy". So what is a reverse proxy, and what is a forward proxy?

Forward proxy:

Because of the firewall, we cannot access Google directly, but we can reach it through a VPN; this is a simple example of a forward proxy. Here the forward proxy acts on behalf of the client: the client knows the target, while the target does not know that the client is connecting through a VPN.

Reverse proxy:

When we access Baidu from the external network, the request is actually forwarded to servers on Baidu's internal network. This is a reverse proxy: it acts on behalf of the server side, and the whole process is transparent to the client.

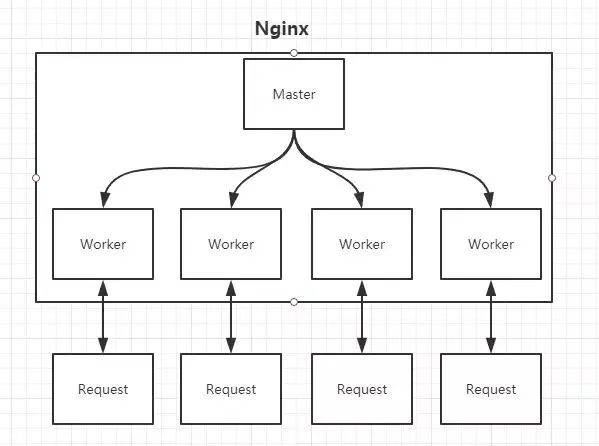

Nginx's Master-Worker mode

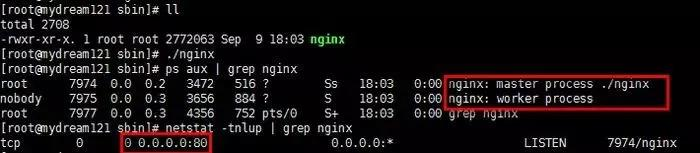

After Nginx starts, it actually opens a socket that listens on port 80. As shown in the figure, Nginx involves a Master process and Worker processes.

What does the Master process do?

Read and validate the configuration file nginx.conf; manage the Worker processes.

What is the role of the Worker process?

Each Worker process runs a single thread (avoiding thread switching) and handles connections and requests. Note that the number of Worker processes is set in the configuration file and is usually tied to the number of CPU cores (which keeps process switching to a minimum); you get as many Worker processes as you configure.
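To make this division of labor concrete, here is a minimal Python sketch of the pre-fork pattern, assuming a POSIX system where `os.fork` is available: a "master" opens one listening socket and then forks single-threaded "worker" processes that all `accept()` on that shared socket. The function names, the response text, and binding to an OS-chosen port on 127.0.0.1 instead of port 80 are illustrative assumptions; the real Nginx master is written in C and also handles signals, respawns crashed workers, and reloads configuration.

```python
import os
import signal
import socket
from typing import Optional


def worker_loop(listener: socket.socket) -> None:
    """Worker: one thread, one loop, accepting connections on the shared socket."""
    while True:
        conn, _addr = listener.accept()
        with conn:
            conn.recv(1024)  # read (and ignore) the request
            conn.sendall(f"served by worker {os.getpid()}\n".encode())


def start_master(num_workers: Optional[int] = None) -> tuple[socket.socket, list[int]]:
    """Master: open the listening socket once, then fork workers that inherit it."""
    # One worker per CPU core, roughly what `worker_processes auto;` does in nginx.conf.
    num_workers = num_workers or os.cpu_count() or 1
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    listener.bind(("127.0.0.1", 0))  # port 0 = any free port; Nginx would use 80
    listener.listen(128)

    pids = []
    for _ in range(num_workers):
        pid = os.fork()
        if pid == 0:  # child: become a worker and never return to the caller
            try:
                worker_loop(listener)
            finally:
                os._exit(0)
        pids.append(pid)
    return listener, pids


def stop_workers(pids: list[int]) -> None:
    """Master shutting down: signal every worker, then reap it."""
    for pid in pids:
        os.kill(pid, signal.SIGTERM)
        os.waitpid(pid, 0)
```

Because every worker inherits the same listening socket, the kernel hands each incoming connection to whichever worker calls `accept()` first, and no worker needs more than one thread.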

Thinking: How does Nginx do hot deployments?

Hot deployment means that after the configuration file nginx.conf is modified, the new configuration takes effect without stopping Nginx and without interrupting any requests!

(Related commands: `nginx -t` checks the configuration, `nginx -s reload` reloads it, and `nginx -s stop` stops Nginx.)

As we already know, the worker processes are responsible for handling the actual requests, so to achieve hot deployment we can imagine two scenarios:

Scenario 1:

After nginx.conf is modified, the master process pushes the updated configuration to the worker processes, and once a worker process receives it, it updates the configuration held by its thread.

(This has a bit of a `volatile` flavor.)

Scenario 2:

After nginx.conf is modified, new worker processes are started. They naturally process requests with the new configuration, and all new requests are handed to them. The old worker processes finish the requests they were already handling and are then shut down.

Nginx uses Scenario 2 to achieve hot deployment!
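Scenario 2 can be illustrated with a toy, in-memory model in Python. It only simulates the bookkeeping (there are no real processes or signals): `reload()` starts a new generation of workers with the new configuration, new requests go only to the newest generation, and an old worker is retired as soon as it has no in-flight requests. The class and method names are hypothetical; in real Nginx, `nginx -s reload` sends a HUP signal to the master process, which does the equivalent with actual worker processes.

```python
from dataclasses import dataclass


@dataclass(eq=False)  # compare workers by identity, not by field values
class Worker:
    generation: int
    config: str
    in_flight: int = 0


class Master:
    def __init__(self, config: str, num_workers: int = 2) -> None:
        self.generation = 0
        self.workers = [Worker(0, config) for _ in range(num_workers)]
        self.draining: list[Worker] = []  # old workers finishing their last requests
        self._rr = 0  # round-robin cursor for handing out new requests

    def reload(self, new_config: str) -> None:
        """Scenario 2: start fresh workers with the new config; old ones only drain."""
        self.generation += 1
        count = len(self.workers)
        self.draining.extend(self.workers)
        self.workers = [Worker(self.generation, new_config) for _ in range(count)]
        self._retire_idle()

    def accept(self) -> Worker:
        """A new request always goes to a current-generation worker."""
        worker = self.workers[self._rr % len(self.workers)]
        self._rr += 1
        worker.in_flight += 1
        return worker

    def finish(self, worker: Worker) -> None:
        """A worker completes one request; idle old workers are then retired."""
        worker.in_flight -= 1
        self._retire_idle()

    def _retire_idle(self) -> None:
        # An old worker with nothing left to do exits; busy ones keep draining.
        self.draining = [w for w in self.draining if w.in_flight > 0]
```

The key property is that no request is dropped: a request accepted before the reload finishes on the old configuration, while everything accepted afterwards sees the new one.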

Thinking: How does Nginx handle high concurrency efficiently?

As mentioned above, the number of Nginx worker processes is tied to the number of CPUs, and each worker process runs a single thread that loops over requests efficiently. This does help efficiency, but it is not enough.

As professional programmers, we can brainstorm a bit: BIO/NIO/AIO, asynchronous/synchronous, blocking/non-blocking...

To handle so many requests at the same time, keep in mind that some requests involve IO, which can take a long time; if the worker waits for it, the worker's processing slows down.

Nginx uses the Linux epoll model. epoll is based on an event-driven mechanism: it monitors whether many events are ready and, once they are, places them in the epoll queue, so the waiting happens asynchronously. The worker only needs to loop over the epoll queue.
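The Python sketch below shows the shape of such an event loop using the standard-library `selectors` module, whose `DefaultSelector` is backed by epoll on Linux (and kqueue on BSD/macOS). A single thread registers many non-blocking sockets, asks the kernel which ones are ready, and services only those, so it never sits blocked on one slow connection. Upper-casing the request is a stand-in for real work, and `socket.socketpair()` stands in for client connections; both are assumptions for illustration, not how Nginx itself is written.

```python
import selectors
import socket


def register(sel: selectors.BaseSelector, conn: socket.socket) -> None:
    """Watch a connection for readability without ever blocking on it."""
    conn.setblocking(False)
    sel.register(conn, selectors.EVENT_READ)


def run_once(sel: selectors.BaseSelector, timeout: float = 0.1) -> int:
    """One iteration of the worker loop: handle every connection reported ready."""
    handled = 0
    for key, _events in sel.select(timeout):  # epoll_wait() under the hood on Linux
        conn = key.fileobj
        data = conn.recv(1024)
        if data:
            conn.sendall(data.upper())  # placeholder for real request processing
        else:  # the peer closed the connection
            sel.unregister(conn)
            conn.close()
        handled += 1
    return handled
```

A worker would simply call `run_once` in an endless loop; connections with nothing to read cost nothing until the kernel reports them ready.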

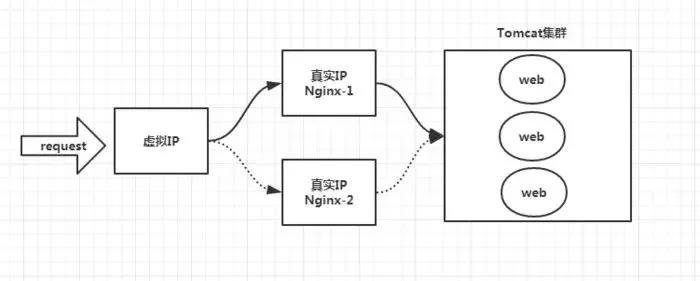

Thinking: What if Nginx goes down?

As the entry gateway, Nginx is important; a single point of failure here is clearly unacceptable.

The answer is: Keepalived + Nginx for high availability.

Keepalived is a high-availability solution designed mainly to prevent a single point of failure on a server; used together with Nginx, it makes web services highly available.

(In fact, Keepalived works not only with Nginx but also with many other services.)

How Keepalived + Nginx achieves high availability:

First: requests should not hit Nginx directly; they should first go through Keepalived (this is the so-called virtual IP, or VIP).

Second: Keepalived should be able to monitor whether Nginx is alive (it provides a user-defined script that periodically checks the state of the Nginx process and changes the node's weight accordingly, which enables Nginx failover).
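As a rough illustration, the Python sketch below models the two pieces: a health check that a keepalived `vrrp_script` could run (exit status 0 meaning healthy), and the way a script `weight` adjusts a node's VRRP priority, so that when the check fails on the active node the backup with the highest remaining priority takes over the VIP. The PID-file path `/run/nginx.pid` is an assumption (distributions differ), and the node names and priority numbers are hypothetical; keepalived itself is configured in its own `keepalived.conf`, not in Python.

```python
import os


def nginx_is_alive(pid_file: str = "/run/nginx.pid") -> bool:
    """Health check: does the PID recorded in Nginx's pid file belong to a live process?"""
    try:
        with open(pid_file) as f:
            pid = int(f.read().strip())
    except (OSError, ValueError):
        return False  # missing or unreadable pid file, or garbage contents
    if pid <= 0:
        return False
    try:
        os.kill(pid, 0)  # signal 0 only checks that the process exists
    except ProcessLookupError:
        return False
    except PermissionError:
        return True  # the process exists but belongs to another user
    return True


def effective_priority(base: int, weight: int, check_ok: bool) -> int:
    """Positive weight is added on success; negative weight is added on failure."""
    if weight > 0:
        return base + weight if check_ok else base
    return base if check_ok else base + weight


def vip_owner(nodes: dict[str, tuple[int, int, bool]]) -> str:
    """The node with the highest effective priority holds the virtual IP."""
    return max(nodes, key=lambda name: effective_priority(*nodes[name]))
```

A standalone check script would exit with `0 if nginx_is_alive() else 1`; with a master at priority 100, a backup at 90, and a weight of -20, a failed check drops the master to 80 and the VIP moves to the backup.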

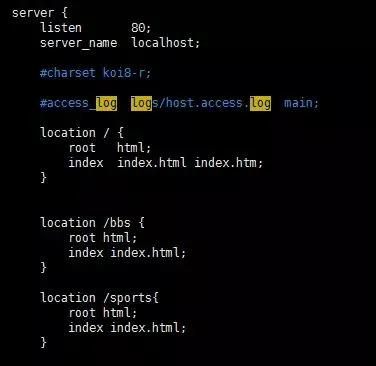

Our main battleground: nginx.conf

Much of the time, in development and test environments, we have to configure Nginx ourselves, which means configuring nginx.conf. nginx.conf is a typical section-based configuration file; let's analyze it below.

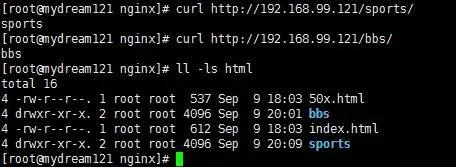

The virtual host

In fact, this means using Nginx as a web server to serve static resources.

First: `location` supports regular-expression matching, so pay attention to its various forms and their priority. (Not expanded here.)
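Since these priority rules are easy to get wrong, here is a simplified Python model of how Nginx picks a `location` for a request URI, following the order described in the official `location` documentation: an exact `=` match wins immediately; otherwise the longest matching prefix is remembered, and if it carries `^~` it is used without checking regexes; otherwise regex locations (`~` case-sensitive, `~*` case-insensitive) are tried in configuration order and the first match wins; if none matches, the remembered prefix is used. The model ignores nested locations, uses Python's `re` instead of PCRE, and the `name` field is a hypothetical label.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Location:
    modifier: str  # "=", "^~", "~", "~*", or "" for a plain prefix
    pattern: str
    name: str  # hypothetical label so we can tell which block matched


def match_location(uri: str, locations: list[Location]) -> Optional[Location]:
    # 1. An exact match ends the search immediately.
    for loc in locations:
        if loc.modifier == "=" and uri == loc.pattern:
            return loc
    # 2. Remember the longest matching prefix (plain or "^~").
    prefixes = [loc for loc in locations
                if loc.modifier in ("", "^~") and uri.startswith(loc.pattern)]
    longest = max(prefixes, key=lambda loc: len(loc.pattern), default=None)
    # 3. A "^~" longest prefix skips the regex phase entirely.
    if longest is not None and longest.modifier == "^~":
        return longest
    # 4. Regexes are checked in configuration order; the first match wins.
    for loc in locations:
        flags = re.IGNORECASE if loc.modifier == "~*" else 0
        if loc.modifier in ("~", "~*") and re.search(loc.pattern, uri, flags):
            return loc
    # 5. No regex matched: fall back to the remembered prefix (may be None).
    return longest
```

The test configuration below is modeled on the A-to-E example in the Nginx `location` documentation: `/images/1.gif` goes to the `^~ /images/` block even though the image regex would also match.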

Second: one of the ways Nginx speeds things up is static/dynamic separation: static resources are placed on and served by Nginx, while dynamic requests are forwarded to the back end.
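A minimal Python sketch of that routing decision, assuming a hypothetical document root `/usr/share/nginx/html`, a Tomcat back end at `http://127.0.0.1:8080` (Tomcat's default HTTP port), and a hand-picked set of static file extensions. In a real nginx.conf this split is expressed with `location` blocks, using `root` for static files and `proxy_pass` for everything else.

```python
import posixpath

STATIC_ROOT = "/usr/share/nginx/html"  # assumption: where Nginx keeps static files
BACKEND = "http://127.0.0.1:8080"      # assumption: a Tomcat instance behind Nginx
STATIC_EXTENSIONS = {".html", ".css", ".js", ".png", ".jpg", ".gif", ".svg", ".ico"}


def route(path: str) -> tuple[str, str]:
    """Return ("static", file path) for static assets, ("proxy", URL) for dynamic requests."""
    _, ext = posixpath.splitext(path)
    if ext.lower() in STATIC_EXTENSIONS:
        # normpath collapses "..", so a request cannot climb above STATIC_ROOT
        safe = posixpath.normpath("/" + path.lstrip("/"))
        return "static", STATIC_ROOT + safe
    return "proxy", BACKEND + path
```

Static files never reach the back end, so Tomcat only spends its threads on requests that actually need application logic.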

Third: we can organize the static resources and log files under Nginx by domain name (that is, into separate directories), which makes management and maintenance easier.

Fourth: Nginx can perform IP access control. Some e-commerce platforms do this at the Nginx layer with a built-in blacklist module, so unwanted requests are handled directly at the Nginx layer instead of passing through Nginx and being intercepted at the back end.
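Nginx's `ngx_http_access_module` expresses this with `allow` and `deny` rules that are checked in order until the first match. The Python sketch below models that first-match-wins evaluation with the standard `ipaddress` module; the rule list is a hypothetical blacklist, and a request that matches no rule is let through, as Nginx does when none of its `allow`/`deny` rules apply.

```python
import ipaddress

# Hypothetical rules, read top to bottom like a sequence of allow/deny directives.
RULES = [
    ("deny", "192.168.1.1"),
    ("allow", "192.168.1.0/24"),
    ("allow", "10.1.0.0/16"),
    ("deny", "all"),
]


def is_allowed(client_ip: str, rules: list[tuple[str, str]] = RULES) -> bool:
    addr = ipaddress.ip_address(client_ip)
    for action, target in rules:
        if target == "all" or addr in ipaddress.ip_network(target):
            return action == "allow"  # the first matching rule decides
    return True  # no rule matched: the request is let through
```

Order matters: moving `deny 192.168.1.1` below the `/24` allow rule would let that address in, because the broader rule would match first.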

Reverse Proxy (proxy_pass)

A reverse proxy is actually very simple: in a `location` block, replace `root` with `proxy_pass`. `root` indicates static resources that Nginx can return by itself, while `proxy_pass` indicates dynamic requests that need to be forwarded, for example proxied to Tomcat.

As mentioned above, a reverse proxy is transparent (request -> Nginx -> Tomcat), so note that, from Tomcat's point of view, the request comes from Nginx's IP address, not from the real client's address.

Fortunately, Nginx not only reverse-proxies requests but also lets users customize HTTP headers, for example to pass the real client IP on to Tomcat.
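In nginx.conf this is typically done with directives such as `proxy_set_header Host $host;`, `proxy_set_header X-Real-IP $remote_addr;` and `proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;`, where `$proxy_add_x_forwarded_for` appends the client address to any incoming `X-Forwarded-For` list. The Python sketch below models both sides: the headers the proxy adds, and how a back end would recover the client IP, trusting the header only when the connection really comes from a known proxy. The function names, addresses, and trusted-proxy set are hypothetical, and header-name case-insensitivity is ignored for brevity.

```python
def add_proxy_headers(incoming: dict[str, str], remote_addr: str, host: str) -> dict[str, str]:
    """Headers the proxy sets before forwarding (mirrors the proxy_set_header lines)."""
    headers = dict(incoming)
    headers["Host"] = host
    headers["X-Real-IP"] = remote_addr
    prior = incoming.get("X-Forwarded-For")
    # Like $proxy_add_x_forwarded_for: append this hop's client address to the list.
    headers["X-Forwarded-For"] = f"{prior}, {remote_addr}" if prior else remote_addr
    return headers


def client_ip_for_backend(headers: dict[str, str], peer_ip: str, trusted_proxies: set[str]) -> str:
    """On the back end, peer_ip is Nginx's address; only trust X-Real-IP if it came via Nginx."""
    if peer_ip in trusted_proxies:
        return headers.get("X-Real-IP", peer_ip)
    return peer_ip
```

The trust check matters because any client can send its own `X-Real-IP` header; the value is only meaningful when it was set by a proxy you control.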

Load Balancing (upstream)

In the reverse proxy above, we specify the Tomcat address with `proxy_pass`, and obviously only one Tomcat address can be given this way. What if we want to specify several to achieve load balancing?

First, `upstream` defines a group of Tomcat servers and specifies the load-balancing policy (IP hash, weighted round-robin, least connections), the health-check policy (Nginx can monitor the status of this group of Tomcats), and so on.

Second, set `proxy_pass` to the name defined by `upstream`.
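For the weighted policy (configured with `server ... weight=N;` inside `upstream`), open-source Nginx uses a "smooth" weighted round-robin: on each pick every server's current weight grows by its configured weight, the server with the largest current weight is chosen, and the winner's current weight is then reduced by the total of all weights. This interleaves picks instead of sending bursts to the heaviest server. The Python sketch below is a simplified model that ignores Nginx's effective-weight adjustments after failures; the server names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Server:
    name: str
    weight: int
    current_weight: int = 0


def pick(servers: list[Server]) -> Server:
    """Smooth weighted round-robin: choose the next server for one request."""
    if not servers:
        raise ValueError("the upstream group is empty")
    total = 0
    best: Optional[Server] = None
    for server in servers:
        server.current_weight += server.weight
        total += server.weight
        if best is None or server.current_weight > best.current_weight:
            best = server  # strict ">" keeps the earlier server on ties
    best.current_weight -= total
    return best
```

With weights 5:1:1, seven consecutive picks yield a, a, b, a, c, a, a, after which every current weight is back to zero and the cycle repeats.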

What problems can load balancing cause?

The obvious issue is that whether a request goes to server A or server B is completely out of our control. That is usually not a problem in itself, but we must pay attention to how user state is kept: information such as the Session can no longer be stored on an individual server.
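One common mitigation is session affinity: the IP hash policy mentioned above (`ip_hash` in nginx.conf) sends every request from the same client address to the same server, so a session held in that server's memory keeps working; the other common approach is to move sessions into a shared store. Nginx's `ip_hash` keys on the first three octets of an IPv4 address (or the whole IPv6 address). The Python sketch below reproduces that keying but uses `zlib.crc32` as a stand-in hash, so the server it picks will not match real Nginx; the server names are hypothetical.

```python
import ipaddress
import zlib


def ip_hash_pick(client_ip: str, servers: list[str]) -> str:
    """Map a client address to a fixed server so its session stays on one machine."""
    addr = ipaddress.ip_address(client_ip)
    if addr.version == 4:
        key = addr.packed[:3]  # first three octets, as Nginx's ip_hash uses for IPv4
    else:
        key = addr.packed      # the whole IPv6 address
    # zlib.crc32 is a stand-in; Nginx uses its own hash function.
    return servers[zlib.crc32(key) % len(servers)]
```

Note the trade-off: all clients behind the same /24 (for example, one office NAT) land on the same server, and adding or removing a server reshuffles most clients.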

Cache

Caching is a mechanism Nginx provides to speed up access. Put bluntly, you switch it on in the configuration and specify a directory so that the cache is stored on disk.

For the specific configuration, refer to the official Nginx documentation; it is not covered here.
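As a hedged illustration of the on-disk part only, the Python sketch below mimics how `proxy_cache_path ... levels=1:2` lays out cache files: the file name is the MD5 of the cache key, the first directory level is the last hex digit of that hash, and the second level is the two digits before it. Nginx's default `proxy_cache_key` is `$scheme$proxy_host$request_uri`. The temporary cache root, the fixed time-to-live, and the `put`/`get` helpers are hypothetical simplifications; real Nginx tracks validity per response (for example via `proxy_cache_valid`) and keeps its keys in a shared-memory zone.

```python
import hashlib
import os
import tempfile
import time
from typing import Optional

# Hypothetical demo location; a real setup might use something like /data/nginx/cache.
CACHE_ROOT = os.path.join(tempfile.gettempdir(), "nginx-cache-sketch")


def cache_file_path(root: str, key: str) -> str:
    """levels=1:2 layout: <root>/<last hex char>/<two chars before it>/<md5 of key>."""
    digest = hashlib.md5(key.encode()).hexdigest()
    return os.path.join(root, digest[-1], digest[-3:-1], digest)


def put(root: str, key: str, body: bytes) -> None:
    path = cache_file_path(root, key)
    os.makedirs(os.path.dirname(path), exist_ok=True)
    with open(path, "wb") as f:
        f.write(body)


def get(root: str, key: str, ttl_seconds: float = 600) -> Optional[bytes]:
    """Return the cached body, or None if it is missing or older than ttl_seconds."""
    path = cache_file_path(root, key)
    try:
        if time.time() - os.path.getmtime(path) > ttl_seconds:
            return None  # stale: a real cache would refetch from the back end
        with open(path, "rb") as f:
            return f.read()
    except FileNotFoundError:
        return None
```

The two-level directory split keeps any single directory from accumulating millions of files, which is why the hash characters are spread across levels.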

Source: WeChat Official Account - Frontman

That concludes W3Cschool编程狮's introduction to the front-end leader advanced guide on Nginx; we hope it helps you.