HashMap talked to the interviewer for half an hour

Jun 01, 2021 Article blog

HashMap contains a lot of knowledge and is well suited to the Java foundation used to examine interviewers, so this is a must-have for Java interviews.

Scene play

Interviewer: Let's introduce yourself first!

Angela: I'm Angela, one of the three in the grass, the strongest single (Zhong Qi doesn't accept it)! Oh, no, it's serial, I'm a slug, and I'm doing -- system development right now.

Interviewer: Look at the familiar Java collection on your resume, has HashMap used it?

Angela: Used. (or familiar taste)

Interviewer: So you tell me about HashMap's internal data structure?

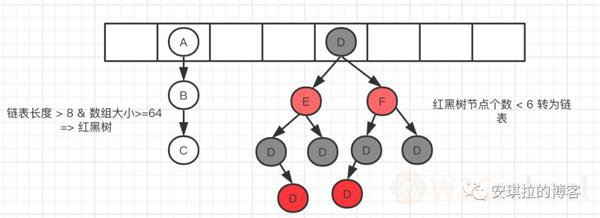

Angela: I'm currently using the JDK 1.8 version, which uses an array of internal links / red and black trees;

Angela: Let me draw you a data structure:

Interviewer: Do you know how HashMap's data is inserted?

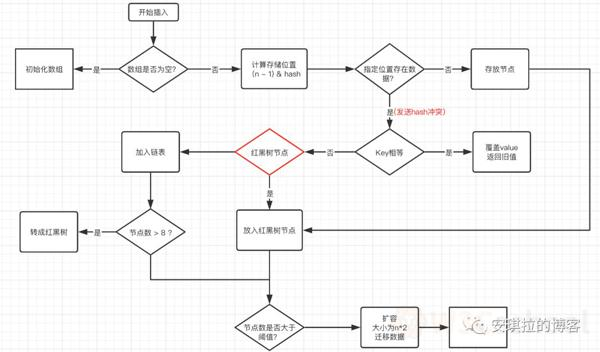

Angela: Well, it's meditative. I think it is better to draw a picture more clearly, as follows:

1. Determine whether the array is empty and initialize it.

2. Not empty, calculate the hash value of k, and calculate the subscript index that should be stored in the array by (n - 1) and hash calculation;

3. Check to see if there is data in table(index) and construct a Node node stored in table(index) without data;

4. The existence of data indicating a hash conflict, continue to determine whether key is equal, equal, and replace the original data with a new value (onlyIfAbsent for false);

5. If not equal, determine whether the current node type is a tree node, if it is a tree node, create a tree node inserted into the red and black tree;

6. If it is not a tree node, create a normal Node to join the list;

7. After the insertion is complete, determine whether the current number of nodes is greater than the threshold, if it is greater than twice the original array.

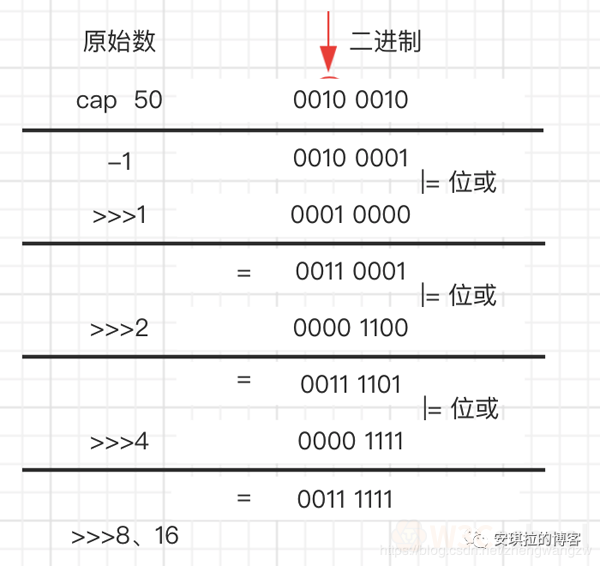

Interviewer: Just now you mentioned the initialization of HashMap, how does HashMap set the initial capacity size?

Angela: "This is also a problem?? " Generally if new HashMap() does not pass values, the default size is 16, the load factor is 0.75, if you pass in the initial size k, the initial size is greater than k 2 integers, for example, if pass 10, the size is 16. (Additional note: Implementation code as follows)

static final int tableSizeFor(int cap) {

int n = cap - 1;

n |= n >>> 1;

n |= n >>> 2;

n |= n >>> 4;

n |= n >>> 8;

n |= n >>> 16;

return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;

} Additional note: The following diagram is a detailed process, the algorithm is to let the initial binary move 1,2,4,8,16 bits to the right, with their own difference or, the high first number of 1 through continuous right shift, the high position of 1 after all into 1,111111 , 1 , 1000000 , ( meets the integer power of greater than 50 and is 2 )

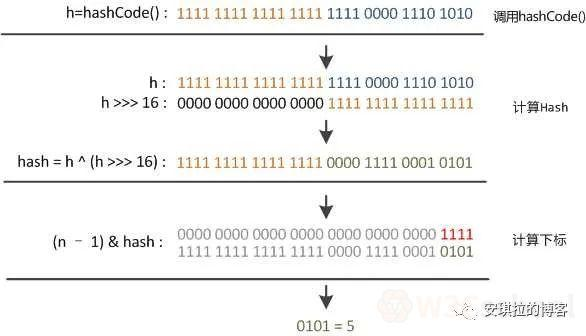

Interviewer: You mentioned the hash function, do you know how HashMap's hash function is designed?

Angela: The hash function is to get the hashcode through key, the int value of 32 bits, and then make the hashcode high 16 bits and lower 16 bits to do different or operation.

Interviewer: Do you know why it's so designed?

Angela: There are two reasons why this, also known as the perturbation function, is designed for two reasons:

Be sure to minimize hash collisions, the more scattered the better; The algorithm must be as efficient as possible, because this is a high-frequency operation, so bit operation is used; Interviewer: Why is the high 16-bit high and low 16-bit different or can reduce the hash collision using hashcode? Can the hash function use key's hashcode directly?

"It's a bit of a trick, Angela almost exploded in place, and I can't wait to get out of the biubiu 213."

Angela: Because the key.hashCode() function calls the hash function that comes with the key key value type, it returns the int hash value. I nt values range from -2147483648 to 2147483647, which adds up to about 4 billion mapping spaces. A s long as the hash function is well mapped loosely, it is difficult to collide in general application. B ut the problem is a 4 billion-length array, and memory can't be put down. You think that if the initial size of the HashMap array is only 16, you need to mold the length of the array before you can use the resulting remainder to access the array subscript.

The mode operation in the source code is to do a "and" operation of hash value and array length-1, and the bit operation is faster than % operation.

bucketIndex = indexFor(hash, table.length);

static int indexFor(int h, int length) {

return h & (length-1);

} By the way, this also explains why HashMap's array length takes an integer power of 2. B ecause this (array length-1) is exactly equivalent to a "low mask". T he result of the And operation is that the highs of the hash values are all zeroed, leaving only the low values for array subscript access. I n the case of the initial length of 16, 16-1 is 15. T he 2-in representation is 000000000 00000000 0000111. The "and" operation with a hash value is as follows, and the result is that the lowest four-digit value is intercepted.

10100101 11000100 00100101

& 00000000 00000000 00001111

----------------------------------

00000000 00000000 00000101 //高位全部归零,只保留末四位 But then the problem comes, so that even if my hash values are loosely distributed, the collision will be serious if only the last few are taken. What's more, if the hash itself doesn't do well, the distribution of the loopholes in the iso-difference column, if you just let the last few lows present regular repetition, it will be extremely painful.

That's when the value of the hash function (the "disturbing function") is reflected, and you should guess at this point. Look at this picture below.

The right displacement of 16 bits, exactly half of 32bit, their own high half and low half of the difference or, in order to mix the high and low of the original hash code, in order to increase the randomness of the low bit. And the mixed lows are mixed with some of the characteristics of the highs, so that the information of the highs is preserved in disguise.

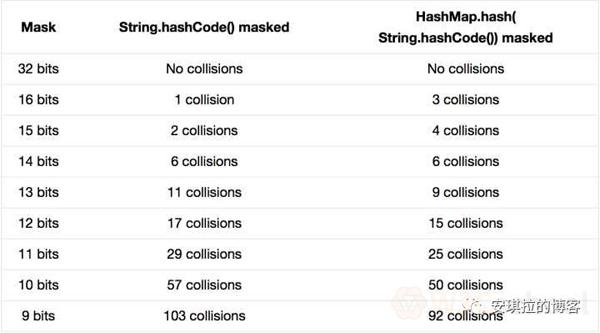

Finally, let's take a look at an experiment in Peter Lawley's column, "An introduction to an optimising a hashing strategy": he randomly selected 352 strings and marked them with an array of low masks, provided that their hash values did not conflict at all.

The results showed that when the HashMap array length was 512 (), that is, when the mask was taken down by 9 bits, 103 collisions occurred without the perturbation function, close to 30%. O nly 92 collisions have been made since the perturbation function was used. C ollisions were reduced by nearly 10 per cent. It seems that perturbation functions do work.

In addition Java 1.8 compared to 1.7 made adjustments, 1.7 made four shifts and four different or different, but obviously Java 8 feel that disturbance to do once is enough, do 4 times, more may not be large marginal utility, so-called for efficiency considerations to change to once.

Here's the hash code for 1.7:

static int hash(int h) {

h ^= (h >>> 20) ^ (h >>> 12);

return h ^ (h >>> 7) ^ (h >>> 4);

} Interviewer: It seems to have done homework, a little material ah! Did you secretly read Angela's blog public number, you just said 1.8 to the hash function has been optimized, 1.8 there are other optimizations?

Angela: 1.8 there are three main optimizations:

1. Arrays and lists have been changed to arrays and lists or red and black trees;

2. The insertion method of the linked list has been changed from head-to-head to the end-plug method, simply speaking, when inserting, if there are already elements in the array position, 1.7 puts the new element into the array, the original node as the successor node of the new node, 1.8 traverses the list, places the element to the end of the list;

3. When expanding capacity 1.7 needs to rehash position the elements in the original array in the position of the new array, 1.8 adopts a simpler judgment logic, the position is unchanged or the index is old capacity size;

4. At insertion, 1.7 first determine whether expansion is required, then insertion, 1.8 insertion first, insertion is completed and then determine whether expansion is required; Interviewer: You tell me why you want to do these optimizations;

Angela: Cough, it's a serial gun.

1. To prevent hash conflicts, the list length is too long, reduce the time complexity from O(n) to O (logn);

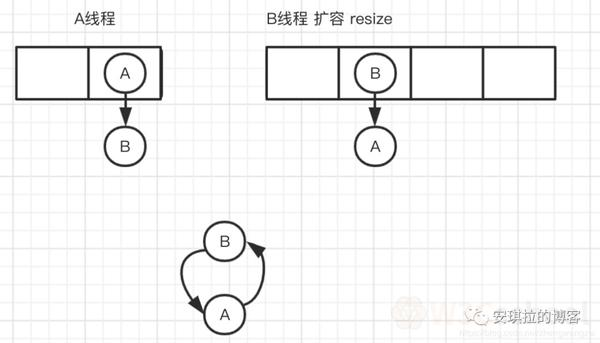

2. Because the 1.7-head insertion method expands capacity, the head-plug method will cause the list to reverse, the multi-threaded environment will produce a ring;

A thread in the insertion node B, B thread is also inserted, encountered insufficient capacity to start expansion, rehash, place elements, the use of head plug method, and then traversed to the B node into the head, thus forming a ring, as shown in the following image:

The amplification of 1.7 calls the transfer code as follows:

void transfer(Entry[] newTable, boolean rehash) {

int newCapacity = newTable.length;

for (Entry<K,V> e : table) {

while(null != e) {

Entry<K,V> next = e.next;

if (rehash) {

e.hash = null == e.key ? 0 : hash(e.key);

}

int i = indexFor(e.hash, newCapacity);

e.next = newTable[i]; //A线程如果执行到这一行挂起,B线程开始进行扩容

newTable[i] = e;

e = next;

}

}

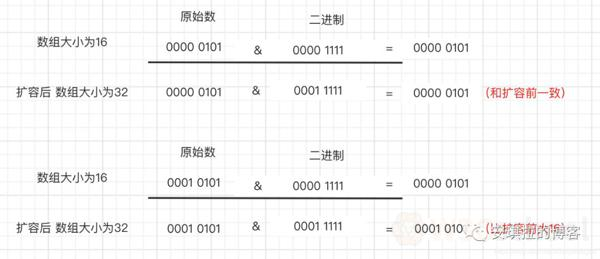

} 3. Why 1.8 can directly locate the original node in the new data without rehash expansion?

This is because the amplification is twice the size of the original array, and the mask used to calculate the array position is only one more high 1, for example: the length before expansion is 16, which is used to calculate the binary n-1 of (n-1) and hash - 1 is 0000 1111,

The binary after expansion to 32 is an extra 1 high, and the > is 0001 1111.

4. Because it is the operation, 1 and any number is itself, it is divided into two situations, as shown in the following figure: the original data hashcode high 4th place 0 and high is 1 case;

The fourth high is 0, the rehash value remains unchanged, the fourth bit is 1, and the rehash value is 16 (the capacity of the old array)

Interviewer: Is HashMap thread safe?

Angela: No, in a multithreaded environment, 1.7 causes problems with dead loops, data loss, data overlays, and data coverage in 1.8.

Take 1.8 as an example, when the A thread executes to the following line 6 to judge the index position is empty, the B thread starts to execute line 7, write node data to the index location, then the A thread resumes the scene, performs the assignment operation, the A thread's data is overwritten;

There's also the 38th line, which also causes multithreaded simultaneous capacity expansion.

final V putVal(int hash, K key, V value, boolean onlyIfAbsent,

boolean evict) {

Node<K,V>[] tab; Node<K,V> p; int n, i;

if ((tab = table) == null || (n = tab.length) == 0)

n = (tab = resize()).length;

if ((p = tab[i = (n - 1) & hash]) == null) //多线程执行到这里

tab[i] = newNode(hash, key, value, null);

else {

Node<K,V> e; K k;

if (p.hash == hash &&

((k = p.key) == key || (key != null && key.equals(k))))

e = p;

else if (p instanceof TreeNode)

e = ((TreeNode<K,V>)p).putTreeVal(this, tab, hash, key, value);

else {

for (int binCount = 0; ; ++binCount) {

if ((e = p.next) == null) {

p.next = newNode(hash, key, value, null);

if (binCount >= TREEIFY_THRESHOLD - 1) // -1 for 1st

treeifyBin(tab, hash);

break;

}

if (e.hash == hash &&

((k = e.key) == key || (key != null && key.equals(k))))

break;

p = e;

}

}

if (e != null) { // existing mapping for key

V oldValue = e.value;

if (!onlyIfAbsent || oldValue == null)

e.value = value;

afterNodeAccess(e);

return oldValue;

}

}

++modCount;

if (++size > threshold) // 多个线程走到这,可能重复resize()

resize();

afterNodeInsertion(evict);

return null;

}Interviewer: So how do you usually solve this thread insecurity?

Angela: There are HashTable, Collections.synchronizedMap, and ConcurrentHashMap in Java for thread-safe Map.

HashTable is directly on the operation method to add the synchronized keyword, lock the entire array, the granularity is relatively large; Collections.syncedMap is an internal class using theCollections collection tool, through the incoming Map encapsulates a SynchronizedMap object, the internal definition of an object lock, the method is implemented through the object lock; C oncurrentHashMap uses segmented locks to reduce lock granularity and increase concurringness. Interviewer: Do you know how Concurrent HashMap's segmented lock works?

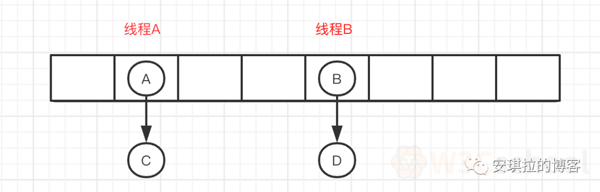

Angela: Oh, my God! Russian set, a set of ConcurrentHashMap member variables using volate retouching, eliminating instruction reordering while ensuring memory visibility, and using CAS operations and syncroned combinations to implement assignment operations, multithreaded operations will only lock the nodes of the current operation index.

As shown below, thread A locks the list where the A node is located, thread B locks the list where the B node is located, and the operation does not interfere with each other.

Interviewer: You mentioned earlier that the list turn red and black tree is the list length to reach the threshold, what is the threshold?

Angela: The threshold is 8 and the red and black tree turn list threshold is 6

Interviewer: Why 8, not 16, 32 or even 7? And why is the threshold for the red and black tree to the linked list 6, not 8?

Angela: You ask the author! Oh, my God, biubiubiu really wants 213 moves.

Because the author designed it this way, oh, no, because, calculated, under the proper design of the hash function, the probability of an 8 hash collision is 6 parts per million, the probability of speaking... Because 8 is enough, as to why the turn back is 6, because if the number of hash collisions hovering around 8, there will always be a chain list and red and black tree transformation, in order to prevent this from happening.

Interviewer: Is hashMap's internal nodes ordered?

Angela: It's out of order, inserted randomly based on the hash value

Interviewer: Is there an orderly Map?

Angela: LinkedHashMap and TreeMap

Interviewer: Tell me how LinkedHashMap is orderly?

Angela: LinkedHashMap maintains a single list with head-to-tail nodes, while linkedHashMap nodes, in addition to inheriting HashMap's Node properties, have before and after to identify front and back nodes. You can implement sorting in the order in which you insert or in the order in which you access them.

/**

* The head (eldest) of the doubly linked list.

*/

transient LinkedHashMap.Entry<K,V> head;

/**

* The tail (youngest) of the doubly linked list.

*/

transient LinkedHashMap.Entry<K,V> tail;

//链接新加入的p节点到链表后端

private void linkNodeLast(LinkedHashMap.Entry<K,V> p) {

LinkedHashMap.Entry<K,V> last = tail;

tail = p;

if (last == null)

head = p;

else {

p.before = last;

last.after = p;

}

}

//LinkedHashMap的节点类

static class Entry<K,V> extends HashMap.Node<K,V> {

Entry<K,V> before, after;

Entry(int hash, K key, V value, Node<K,V> next) {

super(hash, key, value, next);

}

} Sample code:

public static void main(String[] args) {

Map<String, String> map = new LinkedHashMap<String, String>();

map.put("1", "安琪拉");

map.put("2", "的");

map.put("3", "博客");

for(Map.Entry<String,String> item: map.entrySet()){ System.out.println(item.getKey() + ":" + item.getValue());

}

}

//console输出1:安琪拉2:的3:博客 Interviewer: Tell me how TreeMap got organized?

Angela: TreeMap is sorted in Key's natural order or Comprator's order, with red and black trees inside. So either the class to which key belongs implements the Compare interface, or customizes a comparator that implements the Explorer interface and passes it to TreeMap user key for comparison.

Interviewer: As mentioned earlier with the reduction in lock granularity through the combination of CAS and synced, can you tell me about the implementation of CAS and the implementation of synchronized?

Angela: The next issue, OK?

Interviewer: All right, go back and wait for the announcement!