9.1.3 Binding two network cards

May 24, 2021 That's what Linux should learn

In general, a production environment must provide network service 24 hours a day, 7 days a week. Network card binding (bonding) not only improves network transmission speed but, more importantly, keeps normal network service running when one of the network cards fails. Suppose we bind two network cards: in normal operation they transfer data together, which makes transmission faster, and if one card suddenly fails, the other takes over immediately and automatically so that data transmission is not interrupted.

Let's take a look at how to bind a network card.

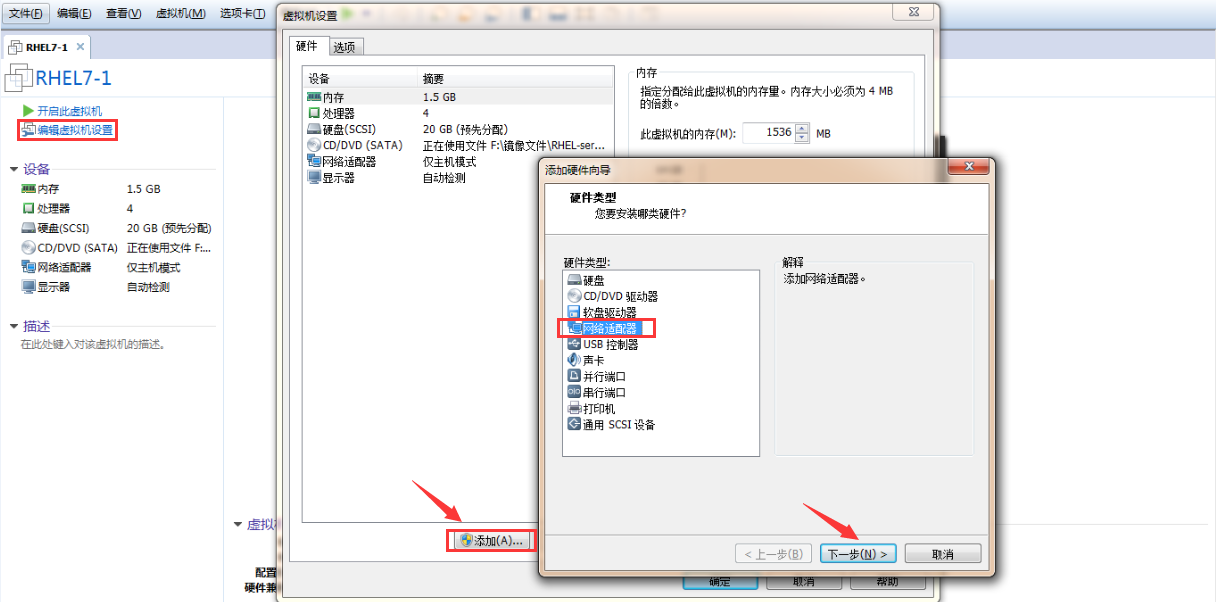

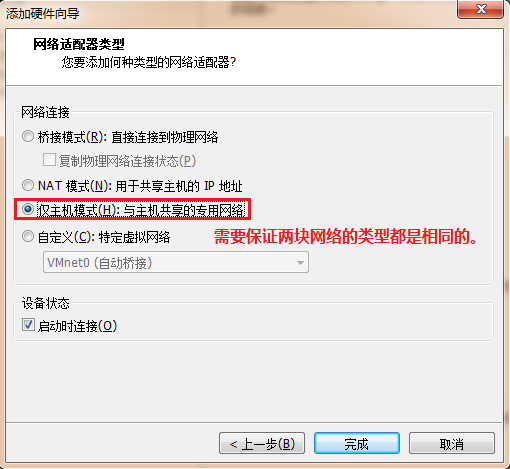

Step 1: Add another network card device to the virtual machine and make sure that both network cards use the same network connection (that is, the same network card mode), as shown in Figures 9-10 and 9-11. Only network cards in the same mode can be bound; otherwise the two cards cannot transfer data to each other.

Figure 9-10 Add another network card device to the virtual machine

Figure 9-11 Ensure that two network cards are on the same network connection (i.e. the same network card mode)

Step 2: Use the Vim text editor to configure the binding parameters of the network card devices. The theory behind network card binding is similar to that of the RAID disk arrays covered earlier: each network card that takes part in the binding needs its own "initial setup". Note that these originally independent network cards must be configured as "slave" cards that serve the "master" card, and they should no longer carry their own IP address or similar information. After this initial setup, they can support network card binding.
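On a host with several cards, this per-card initial setup could be scripted instead of typed by hand. The sketch below is a hypothetical helper: the function name `write_slave_cfg` and the scratch directory are assumptions, not part of the original procedure. It writes one slave configuration file per card, using the same keys as the files edited in this step.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the original text): write one "slave"
# configuration file per network card into a directory chosen by the caller.
# Usage: write_slave_cfg OUTDIR BOND_NAME NIC [NIC...]
write_slave_cfg() {
    local outdir=$1 bond=$2 nic
    shift 2
    for nic in "$@"; do
        # A slave card carries no IP settings; it only names its master.
        cat > "$outdir/ifcfg-$nic" <<EOF
TYPE=Ethernet
BOOTPROTO=none
ONBOOT=yes
USERCTL=no
DEVICE=$nic
MASTER=$bond
SLAVE=yes
EOF
    done
}

# Example (commented out so that sourcing this file has no side effects):
# write_slave_cfg /tmp/bond-test bond0 eno16777736 eno33554968
```

Generating into a scratch directory first makes it possible to review the files before copying them to /etc/sysconfig/network-scripts/ by hand.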

    [root@linuxprobe ~]# vim /etc/sysconfig/network-scripts/ifcfg-eno16777736
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    USERCTL=no
    DEVICE=eno16777736
    MASTER=bond0
    SLAVE=yes

    [root@linuxprobe ~]# vim /etc/sysconfig/network-scripts/ifcfg-eno33554968
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    USERCTL=no
    DEVICE=eno33554968
    MASTER=bond0
    SLAVE=yes

You also need to name the bonded device bond0 and fill in its IP address and other information, so that when users access a service, it is actually the two network cards serving them jointly.

    [root@linuxprobe ~]# vim /etc/sysconfig/network-scripts/ifcfg-bond0
    TYPE=Ethernet
    BOOTPROTO=none
    ONBOOT=yes
    USERCTL=no
    DEVICE=bond0
    IPADDR=192.168.10.10
    PREFIX=24
    DNS=192.168.10.1
    NM_CONTROLLED=no

Step 3: Make the Linux kernel support the network card binding driver. There are three common binding driver modes: mode0, mode1, and mode6. Taking the binding of two network cards as an example, their usage scenarios are as follows.

mode0 (load-balancing mode): Normally both network cards work, with automatic failover, but port aggregation must be configured on the switch that the server's network cards connect to.

mode1 (automatic failover mode): Normally only one network card works; when it fails, the other network card automatically takes over.

mode6 (load-balancing mode): Normally both network cards work, with automatic failover, and no support from the switch is needed.
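The numeric modes above correspond to named modes in the Linux bonding driver. As a small reference sketch, the function below maps each of the three numbers discussed here to its driver name. The function name `bond_mode_name` is made up for illustration, and the driver's other modes are deliberately left out.

```shell
#!/usr/bin/env bash
# Map a numeric bonding mode from this section to the name the Linux
# bonding driver uses for it. Only the three modes discussed here are
# covered; anything else prints "unknown" and returns a non-zero status.
bond_mode_name() {
    case "$1" in
        0) echo "balance-rr" ;;     # round-robin load balancing; switch must aggregate the ports
        1) echo "active-backup" ;;  # one card active, the other on standby
        6) echo "balance-alb" ;;    # adaptive load balancing; no switch support needed
        *) echo "unknown"; return 1 ;;
    esac
}
```

For example, `bond_mode_name 6` prints `balance-alb`.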

For example, suppose a file server that provides NFS or Samba service can deliver a maximum network transmission speed of 100 Mbit/s, but a particularly large number of users access it, so the load on it is heavy. In a production environment, network reliability is extremely important, and transmission speed must also be guaranteed. For such a situation, mode6 is the better choice: it lets the two network cards work together at the same time, fails over automatically when one of them fails, and needs no support from the switch, so it provides reliable network transmission.

Next, use the Vim text editor to create a driver configuration file for network card binding so that the bond0 device supports binding. The file specifies that the cards are bound in mode6 and sets miimon to 100, so the link state is checked every 100 milliseconds and a failed card is switched out automatically.

    [root@linuxprobe ~]# vim /etc/modprobe.d/bond.conf
    alias bond0 bonding
    options bond0 miimon=100 mode=6

Step 4: Restart the network service to make the binding take effect. Normally, only the bond0 device has an IP address and related information:

    [root@linuxprobe ~]# systemctl restart network
    [root@linuxprobe ~]# ifconfig
    bond0: flags=5187<UP,BROADCAST,RUNNING,MASTER,MULTICAST>  mtu 1500
            inet 192.168.10.10  netmask 255.255.255.0  broadcast 192.168.10.255
            inet6 fe80::20c:29ff:fe9c:637d  prefixlen 64  scopeid 0x20<link>
            ether 00:0c:29:9c:63:7d  txqueuelen 0  (Ethernet)
            RX packets 700  bytes 82899 (80.9 KiB)
            RX errors 0  dropped 6  overruns 0  frame 0
            TX packets 588  bytes 40260 (39.3 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eno16777736: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            ether 00:0c:29:9c:63:73  txqueuelen 1000  (Ethernet)
            RX packets 347  bytes 40112 (39.1 KiB)
            RX errors 0  dropped 6  overruns 0  frame 0
            TX packets 263  bytes 20682 (20.1 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    eno33554968: flags=6211<UP,BROADCAST,RUNNING,SLAVE,MULTICAST>  mtu 1500
            ether 00:0c:29:9c:63:7d  txqueuelen 1000  (Ethernet)
            RX packets 353  bytes 42787 (41.7 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 325  bytes 19578 (19.1 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Running ping 192.168.10.10 on the local host checks network connectivity. To verify the automatic failover of network card binding, remove one of the network card devices at random in the virtual machine's hardware configuration while the ping is running. The switchover is clearly visible (generally only one packet is lost), and the other network card then continues to serve users.

    [root@linuxprobe ~]# ping 192.168.10.10
    PING 192.168.10.10 (192.168.10.10) 56(84) bytes of data.
    64 bytes from 192.168.10.10: icmp_seq=1 ttl=64 time=0.109 ms
    64 bytes from 192.168.10.10: icmp_seq=2 ttl=64 time=0.102 ms
    64 bytes from 192.168.10.10: icmp_seq=3 ttl=64 time=0.066 ms
    ping: sendmsg: Network is unreachable
    64 bytes from 192.168.10.10: icmp_seq=5 ttl=64 time=0.065 ms
    64 bytes from 192.168.10.10: icmp_seq=6 ttl=64 time=0.048 ms
    64 bytes from 192.168.10.10: icmp_seq=7 ttl=64 time=0.042 ms
    64 bytes from 192.168.10.10: icmp_seq=8 ttl=64 time=0.079 ms
    ^C
    --- 192.168.10.10 ping statistics ---
    8 packets transmitted, 7 received, 12% packet loss, time 7006ms
    rtt min/avg/max/mdev = 0.042/0.073/0.109/0.023 ms
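In a long test it can be tedious to spot the lost packet by eye. As a rough sketch, the hypothetical helper below (the name `missing_seqs` is an assumption for illustration) reads ping output on standard input and prints each icmp_seq number that is missing between the first and the last reply it sees.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read ping output on stdin and print every icmp_seq
# number that is missing between the first and the last reply seen.
missing_seqs() {
    awk '
        match($0, /icmp_seq=[0-9]+/) {
            # "icmp_seq=" is 9 characters long; keep only the digits after it.
            seq = substr($0, RSTART + 9, RLENGTH - 9) + 0
            if (!started) { first = seq; started = 1 }
            seen[seq] = 1
            if (seq > last) last = seq
        }
        END {
            if (!started) exit        # no replies at all: nothing to report
            for (i = first; i <= last; i++)
                if (!(i in seen)) print i
        }
    '
}

# Example (commented out so that sourcing this file sends no traffic):
# ping -c 20 192.168.10.10 | missing_seqs
```

Fed the ping output shown above, it prints 4, the one request that was sent while the cards were switching over.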
